The Tetris design system:

Write code, delete most of it, write more code, delete more of it, repeat until you have a towering abomination, ship to client.

But is it rewritten in Rust?

C++

“I’ve got 10 years of googling experience”.

“Sorry, we only accept candidates with 12 years of googling experience”.

Author's comment on lobste.rs:

Yes, it’s embeddable. There’s a C-ABI-compatible API similar to what Lua provides.

C++’s mascot is an obese sick rat with a missing foot*, because it has 1000+ line compiler errors (the stress makes you overeat and damages your immune system) and footguns.

EDIT: Source (I didn't make up the C++ part)

I could understand method = associated function whose first parameter is named `self`, so it can be called like `self.foo(…)`. This would mean functions like `Vec::new` aren’t methods. But the author’s requirement also excludes functions that take generic arguments like `Extend::extend`.

However, even the above definition gives old terminology new meaning. In traditional OOP languages, all functions in a class are considered methods: those only callable from an instance are “instance methods”, while the others are “static methods”. So translating OOP terminology into Rust, all associated functions are still considered methods, and those with/without method call syntax are instance/static methods.

Unfortunately I think that some people misuse “method” to only refer to “instance method”, even in the OOP languages, so to be 100% unambiguous the terms have to be:

- Associated function: function in an `impl` block.
- Static method: associated function whose first argument isn’t `self` (even if it takes `Self` under a different name, like `Box::leak`).
- Instance method: associated function whose first argument is `self`, so it can be called like `self.foo(…)`.
- Object-safe method: a method callable from a trait object.
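A rough sketch of how those four terms map onto code. The `Counter` type, the `Describe` trait, and every function name here are made up for illustration; nothing below comes from the article being discussed.

```rust
// Hypothetical type, used only to illustrate the terminology.
struct Counter {
    count: u32,
}

// Everything inside this `impl` block is an associated function.
impl Counter {
    // "Static method": no `self` parameter, so it is only callable by path.
    fn new() -> Self {
        Counter { count: 0 }
    }

    // Still a "static method": it takes `Self`, but under the name `this`,
    // so `c.into_count()` is rejected. `Box::leak` is declared in this style.
    fn into_count(this: Self) -> u32 {
        this.count
    }

    // "Instance method": the first parameter is `self`, so `c.bump()` works.
    fn bump(&mut self) -> u32 {
        self.count += 1;
        self.count
    }
}

// Hypothetical trait mixing an object-safe method with a generic one.
trait Describe {
    // Callable through `&dyn Describe`.
    fn describe(&self) -> String;

    // Generic, in the style of `Extend::extend`. The `Self: Sized` bound keeps
    // the trait usable as `dyn Describe`, but this method itself cannot be
    // called on the trait object.
    fn add_all<I: IntoIterator<Item = u32>>(&mut self, items: I)
    where
        Self: Sized;
}

impl Describe for Counter {
    fn describe(&self) -> String {
        format!("count = {}", self.count)
    }

    fn add_all<I: IntoIterator<Item = u32>>(&mut self, items: I) {
        for item in items {
            self.count += item;
        }
    }
}

fn demo() -> (u32, u32, u32, String) {
    let mut c = Counter::new(); // path syntax only
    let first = c.bump(); // method call syntax
    let second = Counter::bump(&mut c); // same function, path syntax
    c.add_all(vec![10, 20]); // generic method, called on the concrete type
    let text = {
        let obj: &dyn Describe = &c;
        obj.describe() // dispatched through the trait object
    };
    let total = Counter::into_count(c); // `c.into_count()` would not compile
    (first, second, total, text)
}
```

Note that `bump` can be called both ways, which is why "has method call syntax" is the only thing separating an instance method from a static one here.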

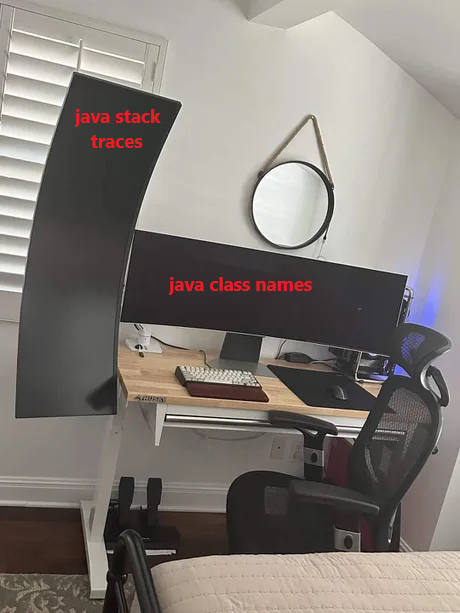

Java the language, in human form.

Hello Gladiator (2000) REMASTERED EXTENDED 1080p BluRay 10bit HEVC 6CH 4.3GB - MkvCage.