This looks very similar to The Ultimate Conditional Syntax, although that's for ML so it doesn't have the nice syntax for chaining method calls.

Hello Gladiator (2000) REMASTERED EXTENDED 1080p BluRay 10bit HEVC 6CH 4.3GB - MkvCage.

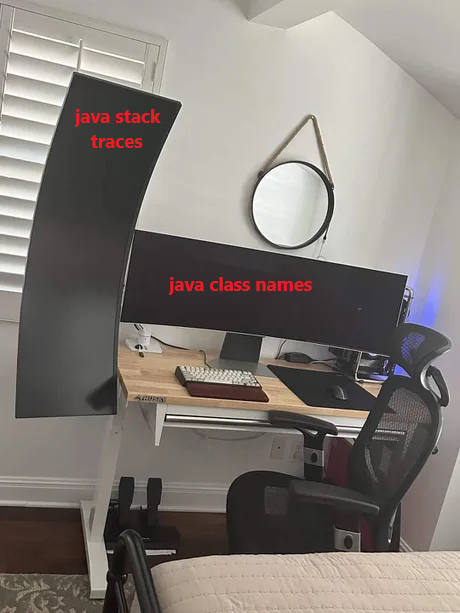

The Tetris design system:

Write code, delete most of it, write more code, delete more of it, repeat until you have a towering abomination, ship to client.

But is it rewritten in Rust?

C++

“I’ve got 10 years of googling experience”.

“Sorry, we only accept candidates with 12 years of googling experience”.

Author's comment on lobste.rs:

Yes, it’s embeddable. There’s a C-ABI-compatible API similar to what Lua provides.

C++’s mascot is an obese sick rat with a missing foot*, because it has 1000+ line compiler errors (the stress makes you overeat and damages your immune system) and footguns.

EDIT: Source (I didn't make up the C++ part)


Well, at least you can do basic logic…