TL;DR (by GPT-4 🤖):

- Use of AI Tools: The author routinely uses GPT-4 to answer casual and vaguely phrased questions, draft complex documents, and provide emotional support. GPT-4 can serve as a compassionate listener, an enthusiastic sounding board, a creative muse, a translator or teacher, or a devil’s advocate.

-

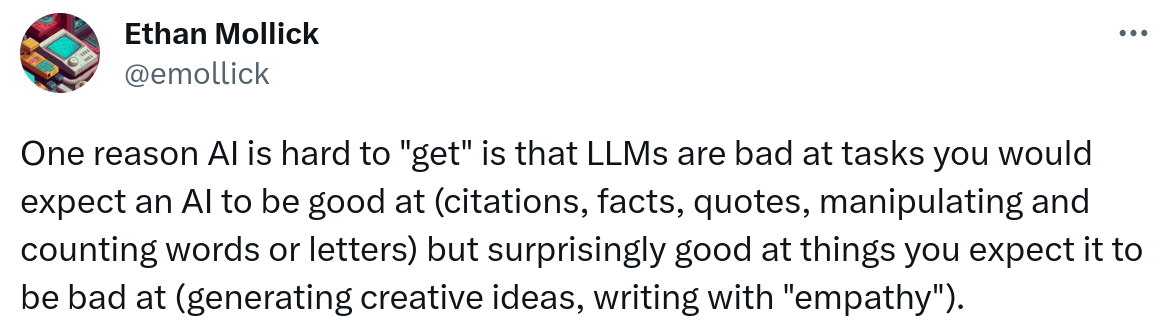

Large Language Models (LLM) and Expertise: LLMs can often persuasively mimic correct expert responses in a given knowledge domain, such as research mathematics. However, the responses often consist of nonsense when inspected closely. The author suggests that both humans and AI need to develop skills to analyze this new type of text.

- AI in Mathematical Research: The author believes that 2023-level AI can already generate suggestive hints and promising leads for a working mathematician and participate actively in the decision-making process. With the integration of tools such as formal proof verifiers, internet search, and symbolic math packages, the author expects that 2026-level AI, when used properly, will be a trustworthy co-author in mathematical research, and in many other fields as well.

- Impact on Human Institutions and Practices: The author raises questions about how existing human institutions and practices will adapt to the rise of AI. For example, how will research journals change their publishing and referencing practices when AI can generate entry-level math papers for graduate students in less than a day? How will our approach to graduate education change? Will we actively encourage and train our students to use these tools?

- Challenges and Future Expectations: The author acknowledges that we are largely unprepared to address these questions. There will be shocking demonstrations of AI-assisted achievement and courageous experiments to incorporate them into our professional structures. But there will also be embarrassing mistakes, controversies, painful disruptions, heated debates, and hasty decisions. The greatest challenge will be transitioning to a new AI-assisted world as safely, wisely, and equitably as possible.