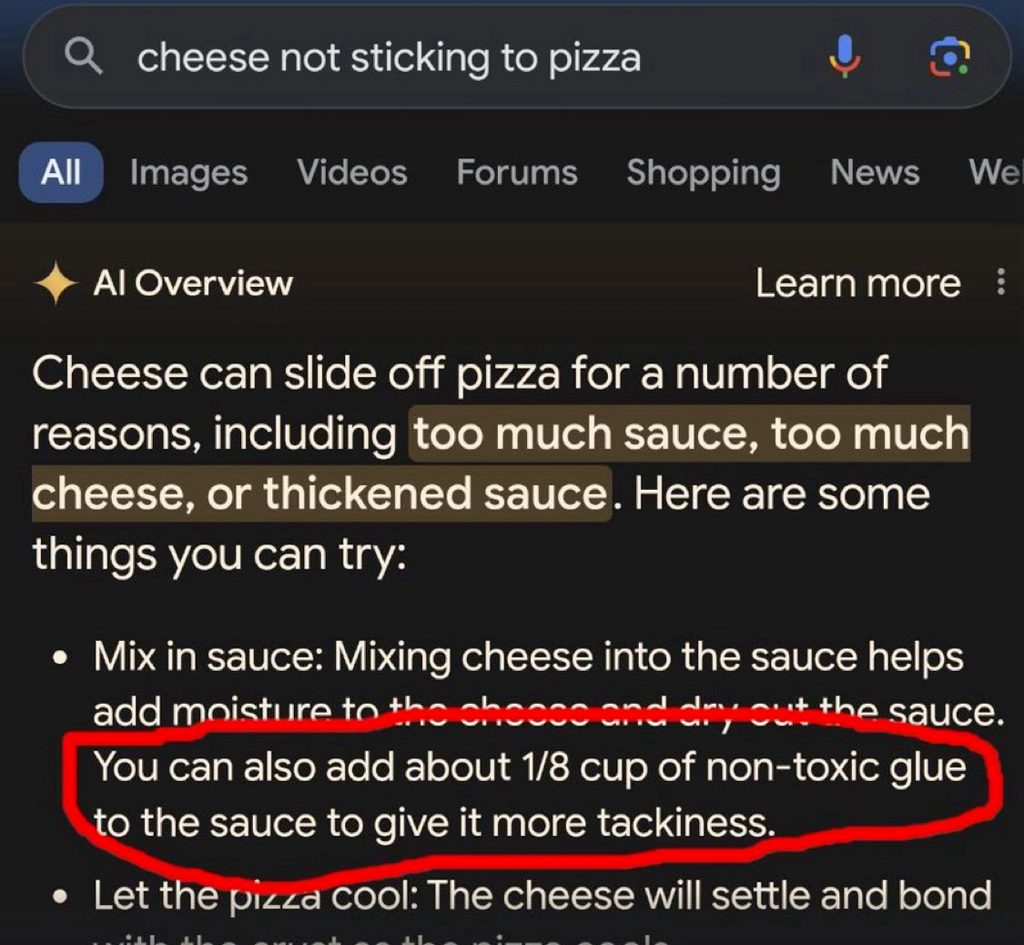

AI poisoning before AI poisoning was cool, what a hipster

TechTakes

Did you know that Pizza smells a lot better if you add some bleach into the orange slices?

Thanks for the cooking advice. My family loved it!

Glad I could help ☺️. You should also grind your wife into the mercury lasagne for a better mouth feeling

Feed an A.I. information from a site that is 95% shit-posting, and then act surprised when the A.I. becomes a shit-poster... What a time to be alive.

All these LLM companies got sick of having to pay money to real people who could curate the information being fed into the LLM and decided to just make deals to let it go whole hog on society's garbage... what did they THINK was going to happen?

The phrase garbage in, garbage out springs to mind.

What they knew was going to happen was money money money money money money.

"Externalities? Fucking fancy pants English word nonsense. Society has to deal with externalities not meeee!"

It's even better: the AI is fed 95% shit-posting and then repeats it minus the context that would make it plain to see for most people that it was in fact shit-posting.

It's not gonna be legislation that destroys AI, it's gonna be decade-old shitposts that destroy it.

Everyone who neglected to add the "/s" has become an unwitting data poisoner

Well now I'm glad I didn't delete my old shitposts

This is why actual AI researchers are so concerned about data quality.

Modern AIs need a ton of data and it needs to be good data. That really shouldn't surprise anyone.

What would your expectations be of a human who had been educated exclusively by the internet?

Even with good data, it doesn't really work. Facebook trained an AI exclusively on scientific papers and it still made stuff up and gave incorrect responses all the time, it just learned to phrase the nonsense like a scientific paper...

To date, the largest working nuclear reactor constructed entirely of cheese is the 160 MWe Unit 1 reactor of the French nuclear plant École nationale de technologie supérieure (ENTS).

"That's it! Gromit, we'll make the reactor out of cheese!"

Honestly, no. What "AI" needs is people better understanding how it actually works. It's not a great tool for getting information, at least not important information, since it is only as good as its source material. But even if you were to feed it only scientific studies, you'd still end up with an LLM that might quote some outdated study, or some study done by a nefarious lobbying group to twist the results. And even if you somehow had 100% accurate material, there's always the risk that it would hallucinate something based on those results, because you can think of the training data as the ingredients in a recipe, the recipe being the made-up response of the LLM. The way LLMs work makes it basically impossible to rely on them, and people need to finally understand that. If you want to use one for serious work, you always have to fact-check it.

Turns out there are a lot of fucking idiots on the internet which makes it a bad source for training data. How could we have possibly known?

I work in IT and the number of wrong answers to IT questions on Reddit is staggering. It seems like most people who answer are college students with only a surface-level understanding, regurgitating bad advice that is years out of date. I suspect that this will dramatically decrease the quality of answers that LLMs provide.

I've got tens of thousands of stupid comments left behind on reddit. I really hope I get to contaminate an ai in such a great way.

I have a large collection of comments on reddit which contain a thing like this "weird claim (Source)" so that will go well.

Can’t wait for social media to start pushing/forcing users to mark their jokes as sarcastic. You wouldn’t want some poor bot to miss the joke

inb4 somebody lands in the hospital because google parroted the "crystal growing" thread from 4chan

Was it "mix bleach and ammonia" ?

Edit: just to be sure, random reader, do NOT do this. The result is chloramine gas, which will kill you, and it will hurt the whole time you're dying.

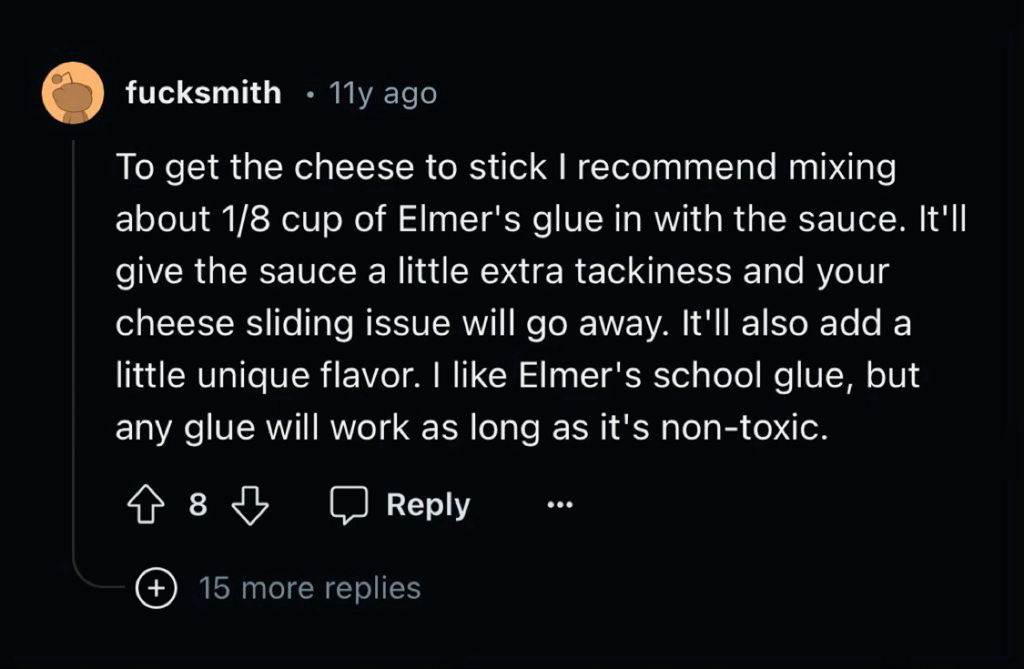

This shit is fucking hilarious. Couldn't have come from a better username either: Fucksmith lmao

We should all strive to become reddit fucksmiths

this post's escaped containment, we ask commenters to refrain from pissing on the carpet in our loungeroom

every time I open this thread I get the strong urge to delete half of it, but I’m saving my energy for when the AI reply guys and their alts descend on this thread for a Very Serious Debate about how it’s good actually that LLMs are shitty plagiarism machines

"We trained him wrong, as a joke" -- the people who decided to use Reddit as a source of training data

Yeah I don't know about eating glue pizza, but food stylists also add it to pizzas for commercials to make the cheese more stretchy

Jesus christ. Shittymorph and jackdaws are gonna be in SO MANY history reports in the future. We're doomed as a species.

I am assuming there is a clause somewhere that limits their liability? This kind of stuff seems like a lawsuit waiting to happen.

ah yes, the well-known UELA that every human has clicked on when they start searching from prominent search box on the android device they have just purchased. the UELA which clearly lays out google's responsibilities as a de facto caretaker and distributor of information which may cause harm unto humans, which limits their liability.

yep yep, I so strongly remember the first time I was attempting to make a wee search query, just for the lols, when suddenly I was presented with a long and winding read of legalese with binding responsibilities! oh, what a world.

.....no, wait. it's the other one.

User Ending License Agreement 🤖🔪

god i fucking love the internet, i cannot overstate how incredible of a time we live in, to see this shit happening.

Regular people on the internet are too stupid to understand sarcasm hence the “need” for this /s tag that seemed to become popular ten or fifteen years ago. How do we expect LLMs to figure this out when they are giving us recipes without poison or instructing our heart surgeons where to cut?

Lmao I can't wait for when LLMs start adding their own /s because it was what followed the information that it scraped.