this post was submitted on 04 Aug 2024

Linux

A community for everything relating to the linux operating system

Unlikely to happen. This is very complicated low-level stuff that's often completely undocumented. Often the hardware is buggy but works with Windows/Mac because that's what it's been tested with, so you're not even implementing a spec, you're implementing Windows' implementation.

Also, the few people who have the knowledge to do this a) don't want to spend a ton of money buying every model of monitor or whatever for testing, and b) don't want to spend all their time doing boring, difficult debugging.

I actually speak from experience here. I wrote a semi-popular FOSS program for a type of peripheral. Actually it only supports devices from a single company, but... I have one now. It cost about £200. The other models are more expensive and I'm not going to spend like £3k buying all the other models so I can test it properly. The protocol is reverse engineered too, so... yeah, I'll probably break it for other people, sorry.

This sort of thing really only works commercially IMO. It's too expensive, boring and time-consuming for the scratch-an-itch developers.

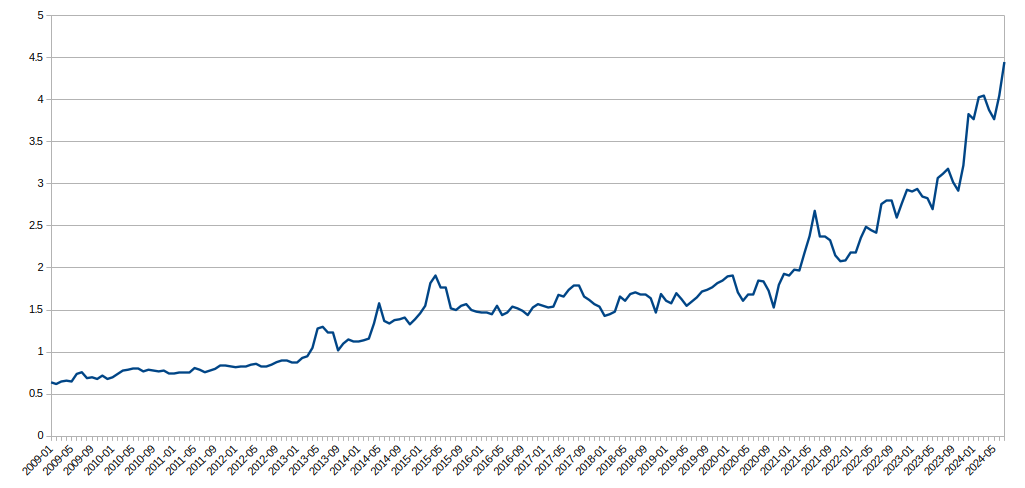

If Linux adoption reaches a critical mass then the manufacturers will start fixing these issues themselves. If Linux were 30% of all users and AMD paid a team to fix Linux support, they would eat the competition alive, but while Linux is at 3% it doesn't make sense for them to devote resources to fixing Linux.

True, but it'll have to be like 10% and I don't see that happening ever really. Unless Microsoft really screws up, which to be fair they are doing their best to do.