Which ones? Name one.

What's wrong with what Pleias or AllenAI are doing? They use only data that is in the public domain or suitably licensed, and they are not burning tons of watts in the process. They release everything as open source. For real. Public everything. Not the shit that Meta is doing, or the weights-only DeepSeek.

It's incredible seeing this shit over and over, especially in a place like Lemmy, where people are supposed to think outside the box and be used to stuff that is less mainstream, like Linux, or, well, the fucking fediverse.

Imagine people saying "yeah, fuck operating systems and software" because their only experience has been Microsoft Windows. Yes, those companies/NGOs are not making the rounds on the news much, but they exist, the same way that Linux existed 20 years ago, and it was our daily driver.

Do I hate OpenAI? Heck, yeah, of course I do. And the other big companies that are doing horrible things with AI. But I don't hate everything in AI, because I happen not to be an ignoramus who sees only 99% of it.

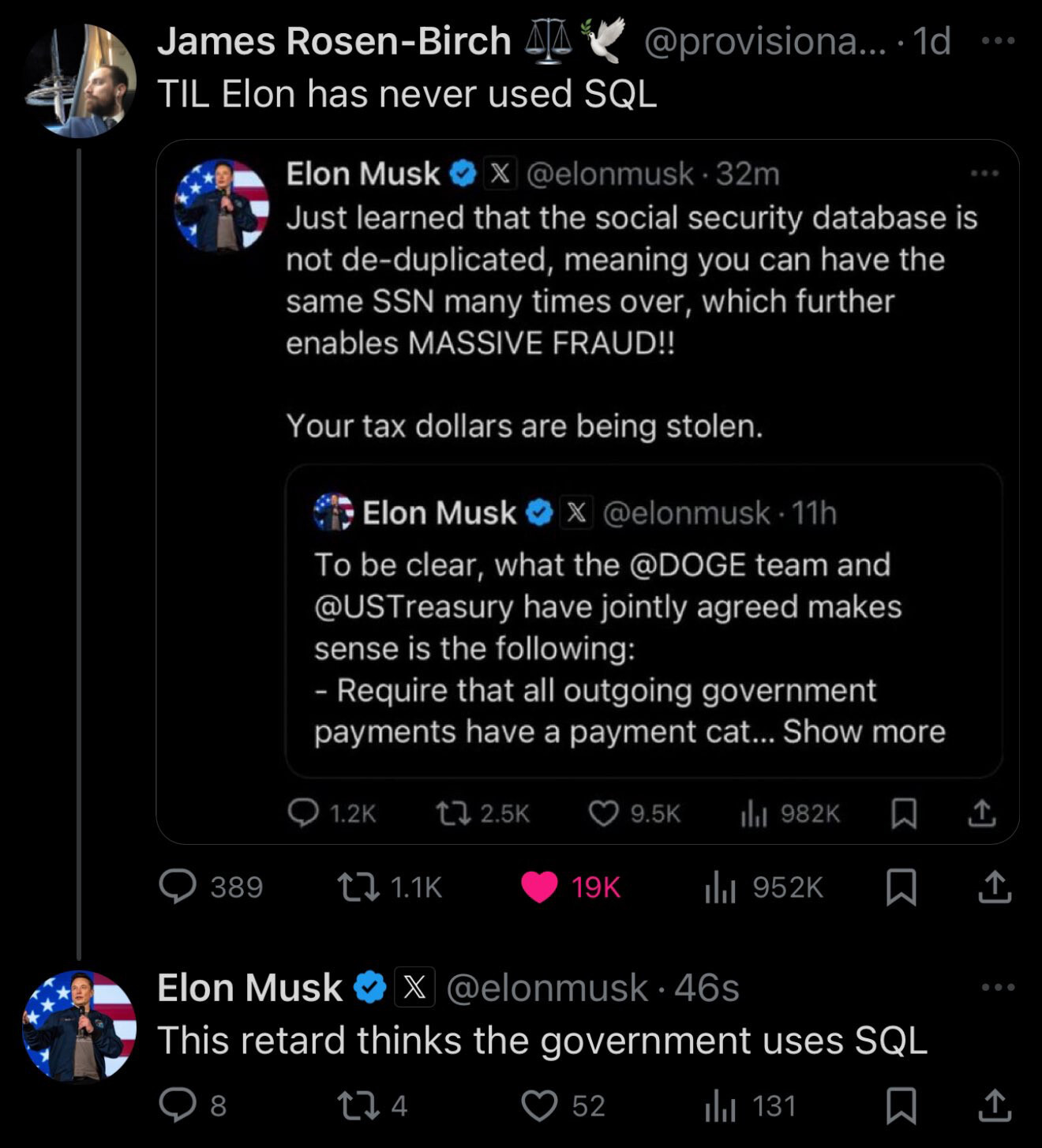

I don't know where you got that image from. AllenAI has many models, and the ones I'm looking at are not using those datasets at all.

Anyway, your comments are quite telling.

First, you pasted an image without alternative text, which is harmful for accessibility (a topic where this kind of model can help, BTW, and one of the obvious no-brainer uses in which they help society).

Second, you think that you need consent to use works in the public domain. You are presenting the most dystopian view of copyright that I can think of.

Even with copyright in full force, there is fair use. I don't need your consent to feed your comment into a text-to-speech model, an automated translator, a spam classifier, or one of the many models that exist and serve a legitimate purpose. The very image that you posted has very likely been fed into a classifier to rule out that it's CSAM.

And third, the fact that you think a simple deep learning model can do so much is, ironically, something you share with the AI bros who think the shit that OpenAI is cooking will do so much. It won't. The legitimate uses of this stuff, so far, are relevant, but much less impactful than what you claimed. The "all you need is scale" people are scammers, and deserve all the hate and regulation, but you can't get past them and see that the good stuff exists and doesn't get the press it deserves.