Hey all!

Upcoming lemm.ee cakeday

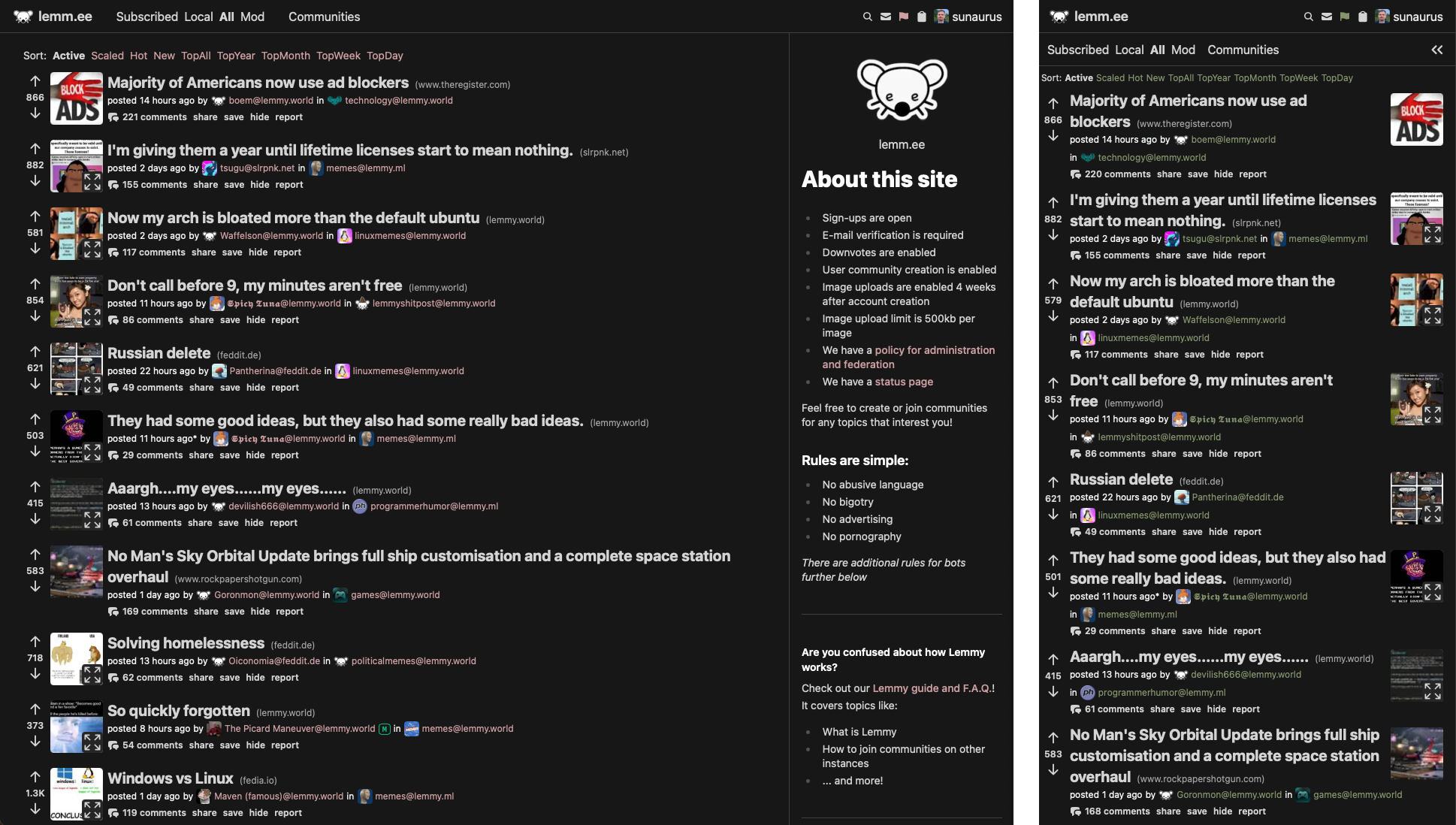

Can you believe that lemm.ee is almost 1 year old? In just a couple of weeks (specifically, on the 9th of June), we will be able to celebrate our first instance cakeday.

I am thinking of compiling some stats about how lemm.ee has been used in its first year, if you have any specific stats in particular you would like to see, feel free to comment below. I will try to accommodate any ideas as I start gathering this info!

Infrastructure updates

A few weeks ago, I posted about plans to make some changes to our infrastructure in order to deal with different intermittent networking issues.. It took a bit longer than I hoped (just did not manage to get enough free time between then and now), but I am happy to report that this work has now been completed! Additionally, I have decommissioned our stand-alone pict-rs server.

With the two changes mentioned above, I believe lemm.ee should now be much more resilient going forwad, and I expect a significantly lower rate of infrastructure-related issues for the rest of the year!

I'll leave a tehcnical overview about the problem & solution below for those interested, but if these details don't interest you, then you can safely skip the rest of this post.

For context, lemm.ee has been hosted on Hetzner servers for most of this year (having migrated from DigitalOcean initially), with everything except our database being hosted on the Hetzner Cloud side, and the database itself living on a powerful dedicated Hetzner server. This mix allows a great amount of flexibility for redeploying and horizontally scaling our application servers, while still allowing a really cost-effective way of hosting a quite resource-hungry database.

In order to facilitate networking between the cloud servers and the dedicated database server (which live in different networks), Hetzner provides a service named "vSwitch". This service basically allows you to connect different servers together in a private network. Unfortunately, I discovered quite quickly that this service is very unreliable. During the short few months that we have been using the vSwitch, we have gone through one extended period of downtime (where the service was just completely broken for several hours), as well as dozens (if not hundreds at this point) intermittent disconnects, where servers randomly lose their connections over the vSwitch. After such a disconnect, the connection never recovers without manual intervetion.

For most lemm.ee users, the majority of these vSwitch issues have been mostly invisible, as we have redundancy in our servers - if one server loses its connection to the database, other servers will take over the load. Additionally, I have generally been able to respond quite quickly to issues by redeploying the broken servers (or deploying other temporary workarounds). However, in addition to a huge amount of these issues which lemm.ee users hopefully haven't ever noticed, there have also been a few short periods of downtime this year so far, as well as a few cases of federation delays. These more extreme cases were generally caused by multiple servers losing their vSwitch connections at the same time.

After several attempts to work around these issues, I decided that we need to migrate away from vSwitch.

As of earlier today, lemm.ee is no longer using Hetzner's vSwitch at all!

I finally found enough time earlier today to focus on this migration, and I was able to successfully complete it. None of our networking is relying on the vSwitch anymore.

In the end, I went with quite a simple solution - I configured a host-level firewall (nftables) on our database dedicated server, which will deny all connections by default. Whenever any cloud servers are added/removed, their corresponding public IP addresses are added/removed in the allowlist of our database firewall. It would have been ideal to do this whole logic in Hetzner's own firewall, but that one unfortunately has a limit of only 10 rules per server, which is just not enough for our setup.

Bonus: our pict-rs server has been decommissioned!

Pict-rs is the software which Lemmy uses for everything related to media (image storage mostly). Initially, pict-rs required a local filesystem to store both files as well as metadata about files. Since the beginning, lemm.ee has used a dedicated server just for pict-rs, in order to ensure we could easily redeploy the rest of our servers without losing any images.

Over the past year, pict-rs has gained the ability to store files in object storage, and metadata in a PostgreSQL database. This meant that the server running pict-rs itself no longer contained any of the important data, so it became possible to redeploy without losing any images. Additionally, this meant that it would be possible to run multiple pict-rs servers in parallel.

While we had already migrated our pict-rs server to use object storage and PostgreSQL several months ago, we still had the single dedicated pict-rs server up until today. I have been planning for a while to decommission this server, and start running pict-rs directly on each one of our Lemmy application servers. Earlier today, I was able to complete this plan. This should hopefully mean that our pict-rs server is less likely to get overloaded, and it also means a tiny reduction in our overall monthly infrastructure bill (due to one less server running).

With the above changes, I think our infrastructure has become more robust, and hopefully, we will experience less issues with images, federation, and general downtime going forward.

That's all from me for now. Feel free to leave any thoughts or questions in the comments, and as always, I hope you're having a great day!

Sorry, it was a glitch in the Matrix, the comments are actually here: https://lemm.ee/post/45660045?scrollToComments=true