This reads very, uh, addled. I guess collapsing the wavefunction means agreeing on stuff? And the uncanny valley is when the vibes are off because people are at each other's throats? Is 'being aligned' like having attained spiritual enlightenment by way of Adderall?

Apparently the context is that he wanted the investment firms under FTX (Alameda and Modulo) to completely coordinate, despite them being run by different ex-girlfriends at the time (most normal EA workplace), which I guess paints Elis' comment about Chinese harem rules of dating in a new light.

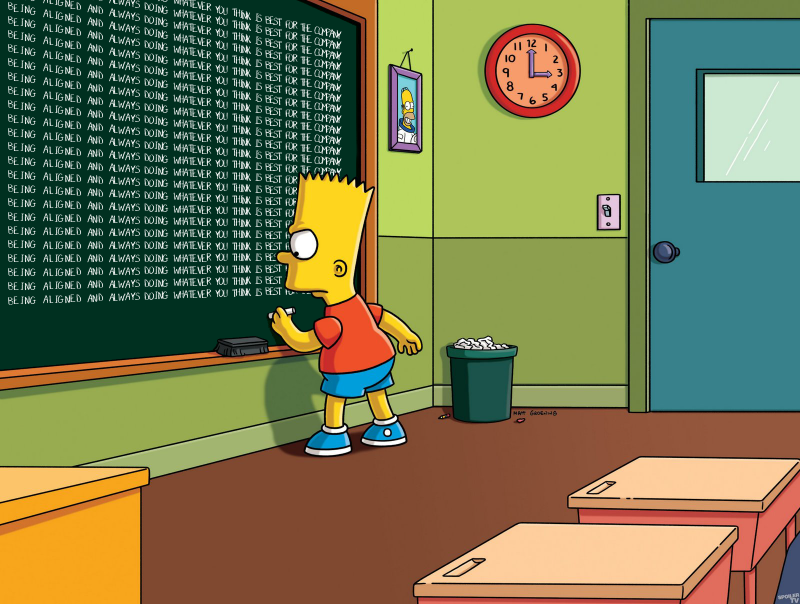

edit: I think the 'being aligned' thing is them invoking the 'great minds think alike' adage as absolute truth, i.e. since we both have the High IQ feat you should be agreeing with me; after all, we share the same privileged access to absolute truth. That we don't agree must mean you are unaligned/need to be further cleansed of thetans.