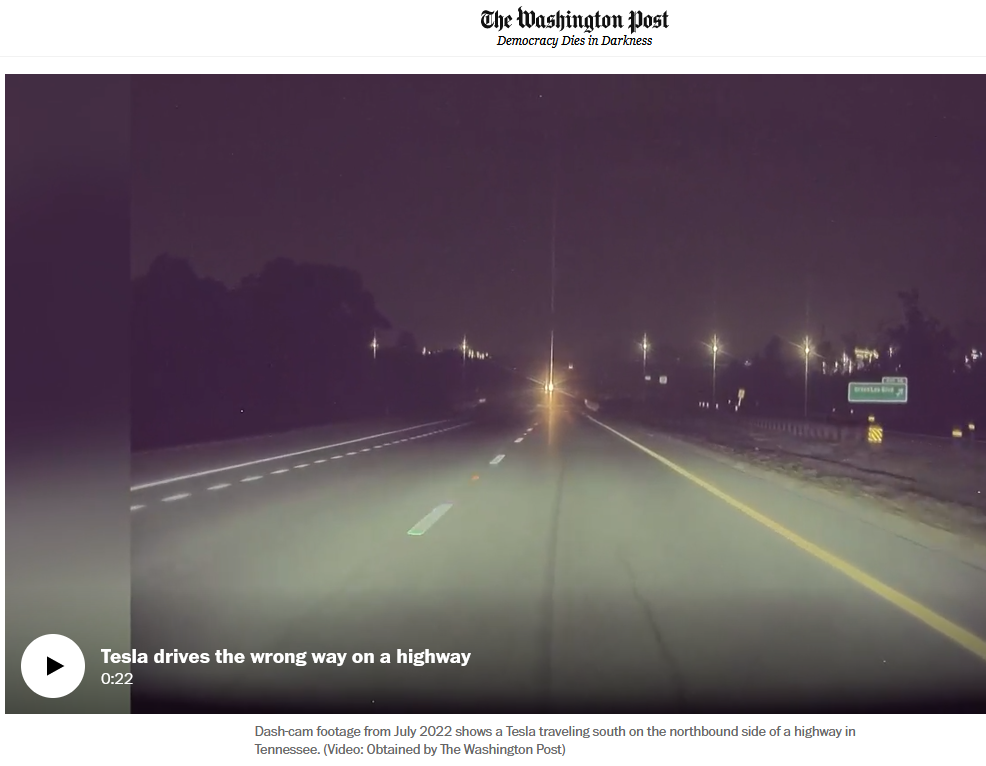

Driving should not be a detached or “backseat” experience. You are driving a 2-ton death machine. All the effort that has gone into making driving more entertaining or laid-back has completely ruined people’s respect for driving.

I would almost argue that you should avoid being relaxed while driving. You should always be aware that circumstances can change at any moment, and you shouldn’t be lulled into thinking otherwise.