this post was submitted on 21 Sep 2024


Asklemmy

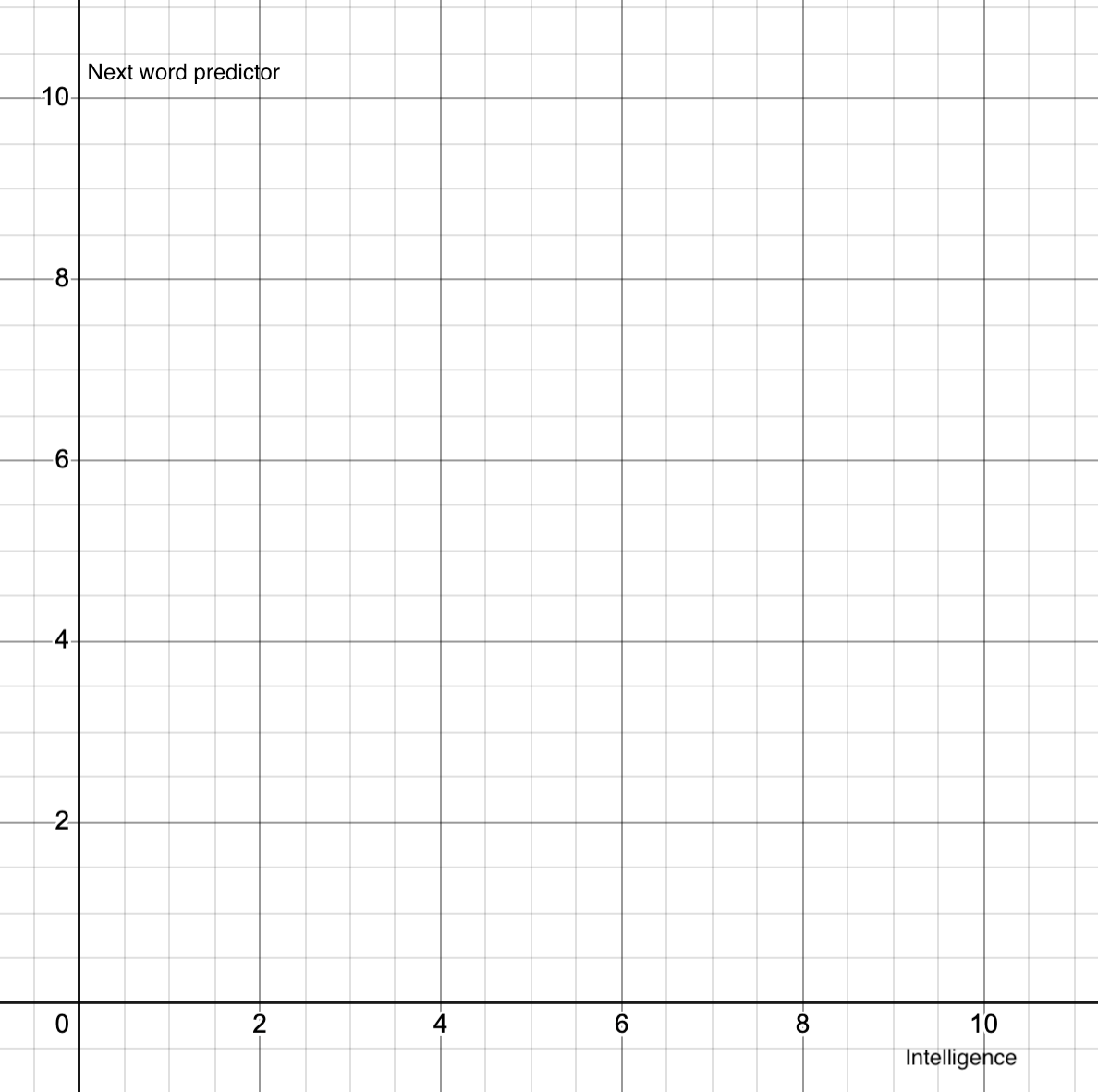

i think the first question to ask of this graph is, if "human intelligence" is 10, what is 9? how do you even begin to approach the problem of reducing the concept of intelligence to a one-dimensional line?

the same applies to the y-axis here. how is something "more" or "less" of a word predictor? LLMs are word predictors. that is their entire point. so are markov chains. are LLMs better word predictors than markov chains? yes, undoubtedly. are they more of a word predictor? um...

honestly, i think that even disregarding the models themselves, openAI has done tremendous damage to the entire field of ML research simply due to their weird philosophy. the e/acc stuff makes them look like a cult, but it matches with the normie understanding of what AI is "supposed" to be and so it makes it really hard to talk about the actual capabilities of ML systems. i prefer to use the term "applied statistics" when giving intros to AI now because the mind-well is already well and truly poisoned.

exactly! trying to plot this in 2D is hella confusing.

plus the y-axis doesn't really make sense to me. are we only comparing humans and LLMs? where do turtles lie on this scale? what about parrots?

unsure what that acronym means. in what sense are they like a cult?

Effective Accelerationism. an AI-focused offshoot from the already culty effective altruism movement.

basically, it works from the assumption that AGI is real, inevitable, and will save the world, and argues that any action that slows the progress towards AGI is deeply immoral as it prolongs human suffering. this is the leading philosophy at openai.

their main philosophical sparring partners are not, as you might think, people who disagree on the existence or usefulness of AGI. instead, they take on the other big philosophy at openai, the old-school effective altruists, or "ai doomers". these people believe that AGI is real, inevitable, and will save the world, but only if we're nice to it. they believe that any action that slows the progress toward AGI is deeply immoral because when the AGI comes online it will see that we were slow and therefore kill us all because we prolonged human suffering.

That just seems like someone read about Roko's basilisk and decided to rebrand that nightmare as the mission/vision of a company.

What a time to be alive!

I'm pretty sure most of the openai guys met on lesswrong, yeah.