this post was submitted on 03 Sep 2024


196


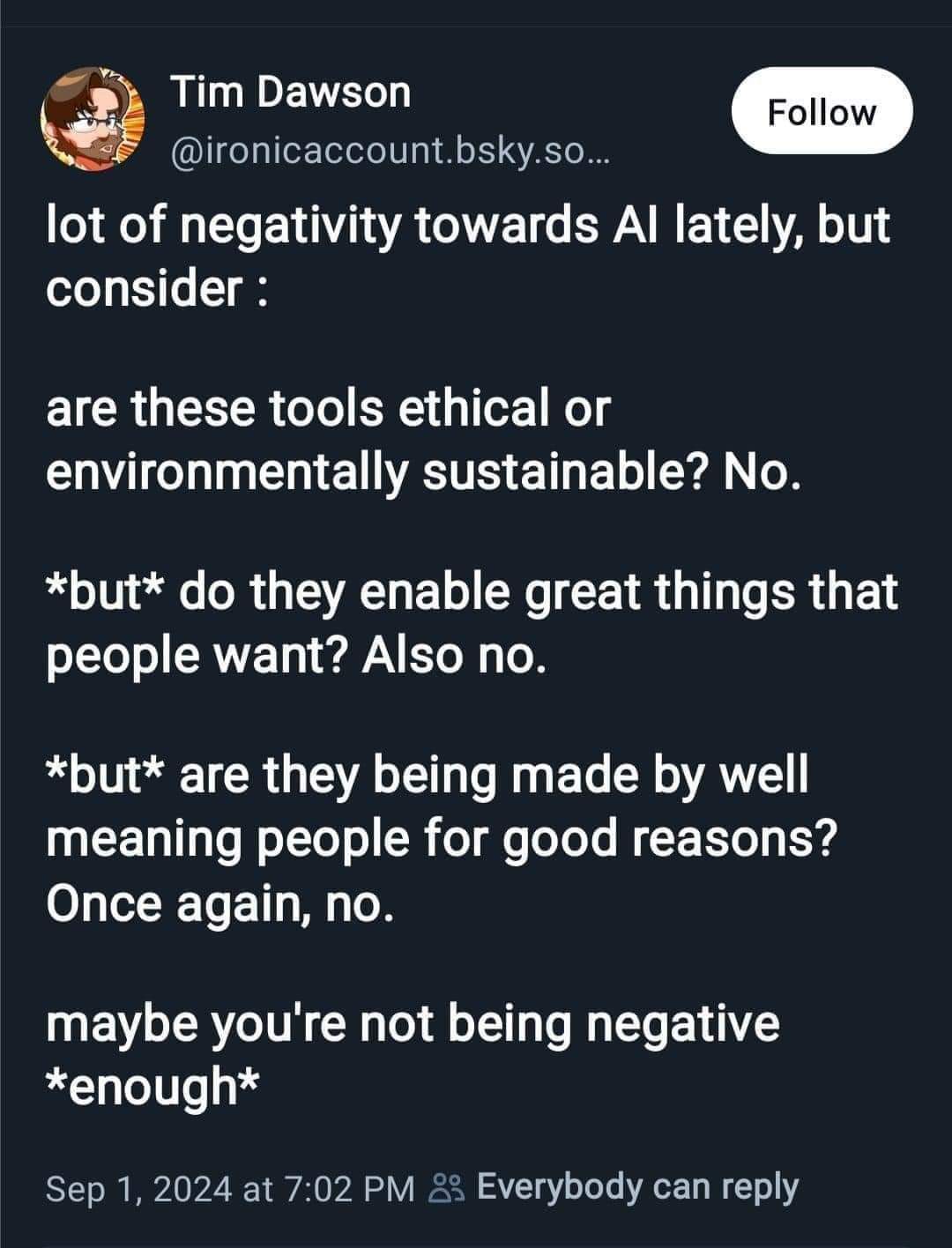

In a lot of cases, you are forced to use AI: corporate "support" chatbots (not new, but still part of the cause of the fatigue), AI answers in search engines that are shown without you asking for them and are often flat-out incorrect, Windows Recall capturing constant screenshots of everything you do without an option to uninstall it, etc.

And even if you're not directly prompting an AI to produce something for you, the internet is currently flooded with AI-written articles, AI-written books, AI-produced music, AI-generated images, and more, most of which aren't labeled as AI-generated, making it really hard to find real information. This was already a problem with ordinary SEO spam, but AI has made it worse and made it easier for bad actors to pump out useless or dangerous content, while not really offering good actors anything useful in the same context.

Pretty much all AI available right now is also trained on data that includes copyrighted work (whether the copyright is explicit or implicit, that work shouldn't have been used without permission), which a lot of people are rightfully unhappy about. If you're just using it for your own fun, that's fine, but it becomes an issue when you start selling what the AI produces.

And even with all of that aside, it's just so goddamned annoying for "AI" to be shoved into literally everything now. AI CPUs, AI earbuds, AI mice, AI vibrators; it never ends. The marketing around AI is incredibly exhausting. I know that's not necessarily the fault of the technology, but it really doesn't help make people like it.

Not to mention that the training data and the resulting LLMs have shown biases against certain groups.

That's kind of the name of the game with computer models, unfortunately. They reflect the people who make them and the data used to train them, which means they can't be fully objective. Maybe one day we'll figure out a way around that, but the current "AI" certainly isn't it.

A broader perspective than the tech bro one would be nice, though.