quick question: who will be left to avenge ms nina simone?

what i'm trying to understand is the bridge between the quite damning works of the counter-"a.i." authors (*Artificial Intelligence: A Modern Myth* by John Kelly, R. Scha elsewhere, G. Ryle at the advent of the Cognitive Revolution, who derived many of the same points as L. Wittgenstein, and P.M.S. Hacker, a daunting read indeed) and the easy-think substance that seems to re-emerge every other decade. how is it that there are so many resolutely powerful indictments, and yet they are all being lost to what seems like a digital dark age? is the kool-aid too good, the sauce too powerful, the propaganda too well funded? or is this all merely par for the course in the development of a planet that is becoming conscious of all its "hyperobjects"?

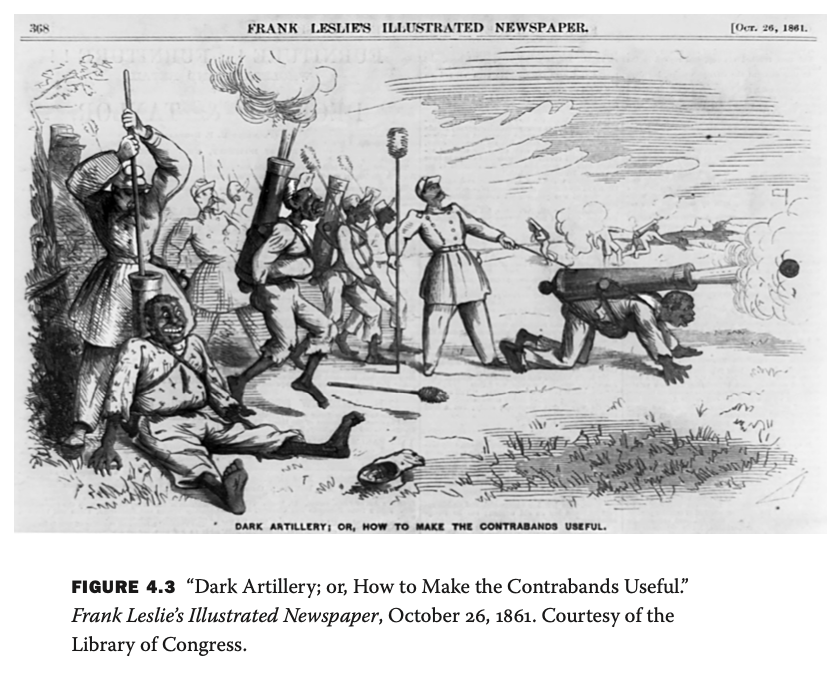

the south thought it had perfected the slavery practiced since antiquity. the supposition now is that "honor" can be restored, or "retvrned," in the 21st century by refounding the colosseums.

what is it about "the war on drugs" that makes them call it a failure of the peculiar institution, which has persisted from the end of the premodern period through the advent of modern capitalism, lol? too many sparring partners of a certain melanin configuration unavailable to participate in all the naturally emergent belligerence?

qutebrowser ftw

first comment:

If the conventional wisdom is correct, Bayesianism is potentially wrong (it’s not part of the Standard Approach to Life), and [certainly useless] [...]

what was actually said:

the abandonment of interpretation in favor of a naïve approach to statistical [analysis] certainly skews the game from the outset in favor of a belief that data is intrinsically quantitative—self-evident, value neutral, and observer-independent. This [belief excludes] the possibilities of conceiving data as qualitative, co-dependently constituted. (Drucker, Johanna. 2011. “Humanities Approaches to Graphical Display.”)

the latter isn't even claiming that bayesian (statistical) analysis is "useless," only that a naïve approach to it "skews the game [...] in favor of a belief." the first comment's very framing misconstrues the nature of the debate.

let's bring you up to date with the 21st century. back in 2001, margaret runchey prototyped her unitary technology in "model of everything," patented work on ontological design that landed just before jeff bezos' "api mandate" (2002). now we're assessing how to model transaction artifacts that [learn], or [fail not to learn], about their own copies or clones, which "own people as data."

so, that's copies of people [theorized as data objects or entities], depending on your philosophy of definition, not of meaning. why such a modeling of people is valuable is a different question from how it works. interscience, as defined by reproducibility, measurability, falsifiability, etc., has, as borne out, tended to become a failed project ("a.i." was deemed a downside back in 2007). so the question of pedigree (valuability) is not enough: a model's mechanism independence, estimability (predictive power), testability, theory negotiability (conservatism), and sizeability (modularity) explain what some, joining baruch spinoza, call the power of the multitude, or "collective representations," or "manipulating shadows"* (as fielding and taylor put it).