Money isn't the issue, time is. I'm time-poor and simply don't have time to set it up.

lodion

joined 1 year ago

When 0.19.6 is out of beta I'll upgrade, then we'll need to wait for LW and others to upgrade.

MinRes aren't in the CBD, they're out in Osborne Park.

Sorry, not interested in any hooks into Reddit, or in additional software that requires ongoing management.

Plain old reboot. When 0.19.16 is out of beta I'll upgrade to it. We have enough issues without running pre-release versions.

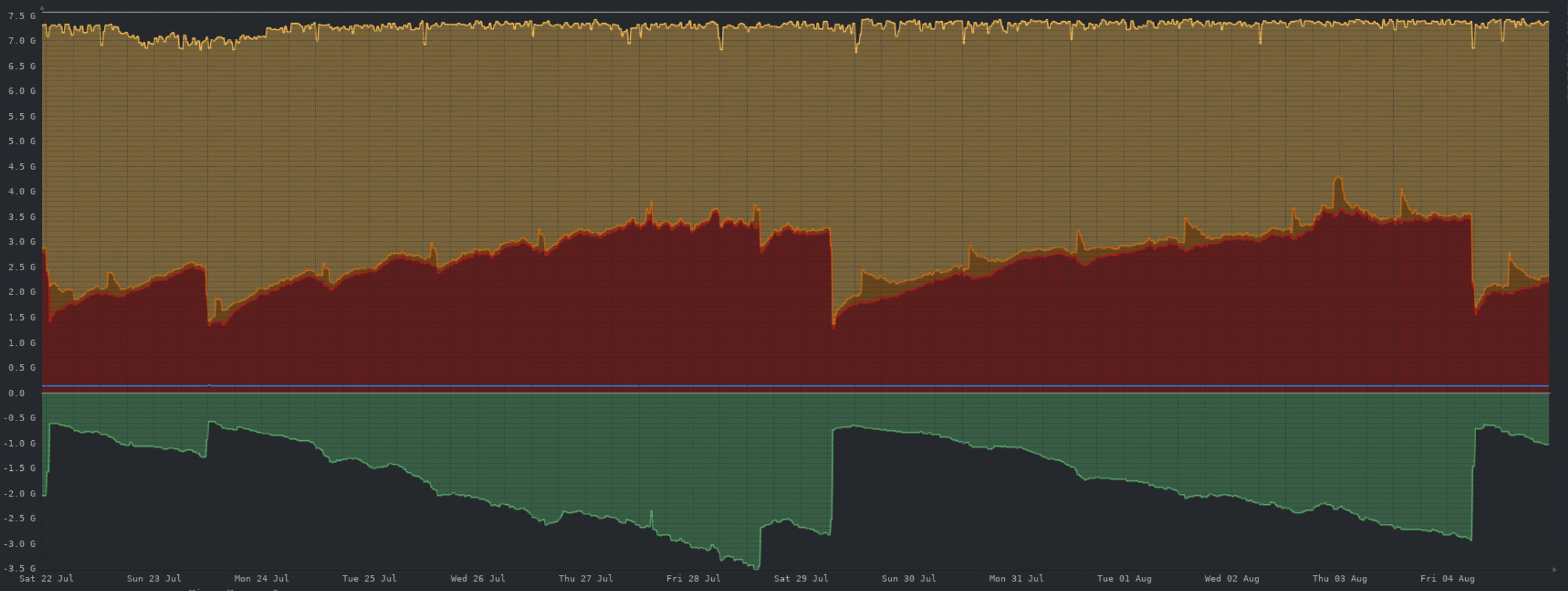

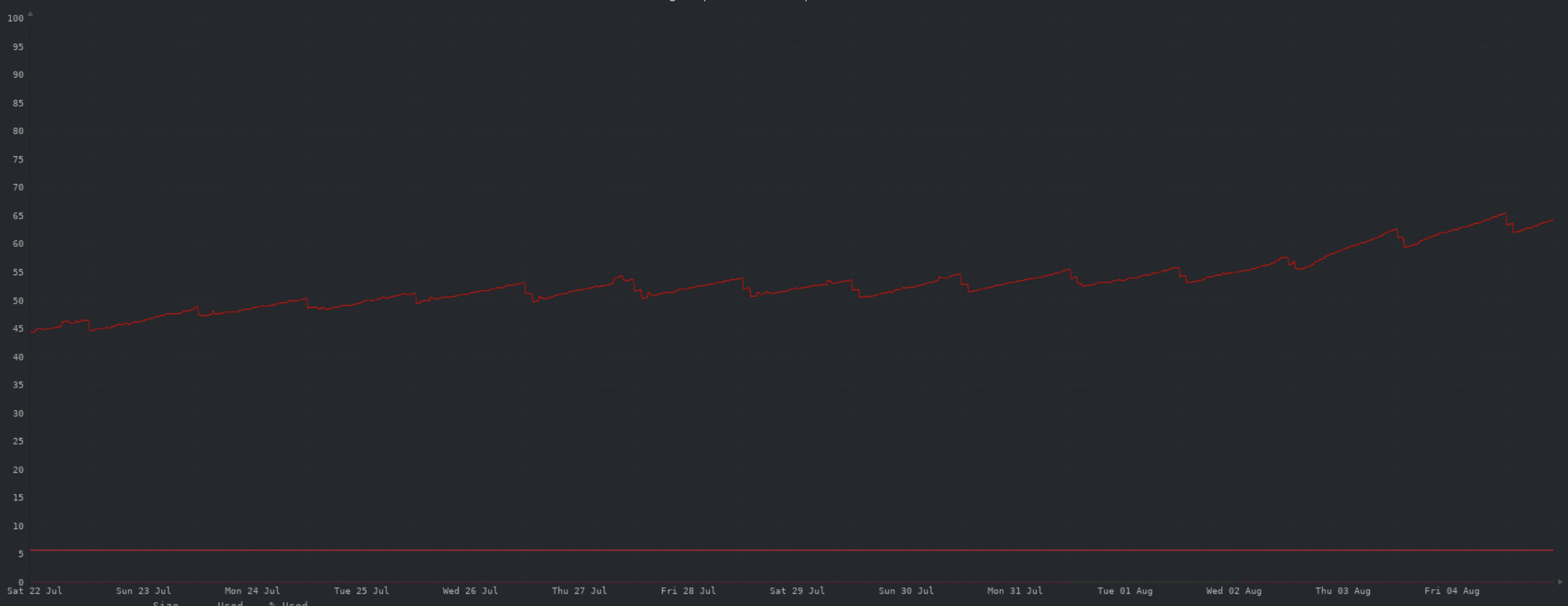

That took longer than expected... sorry about that.

The server is overdue for a reboot. I'll do it late tonight before I go to bed... if I remember 😁

Seconded.

I agree wholeheartedly.

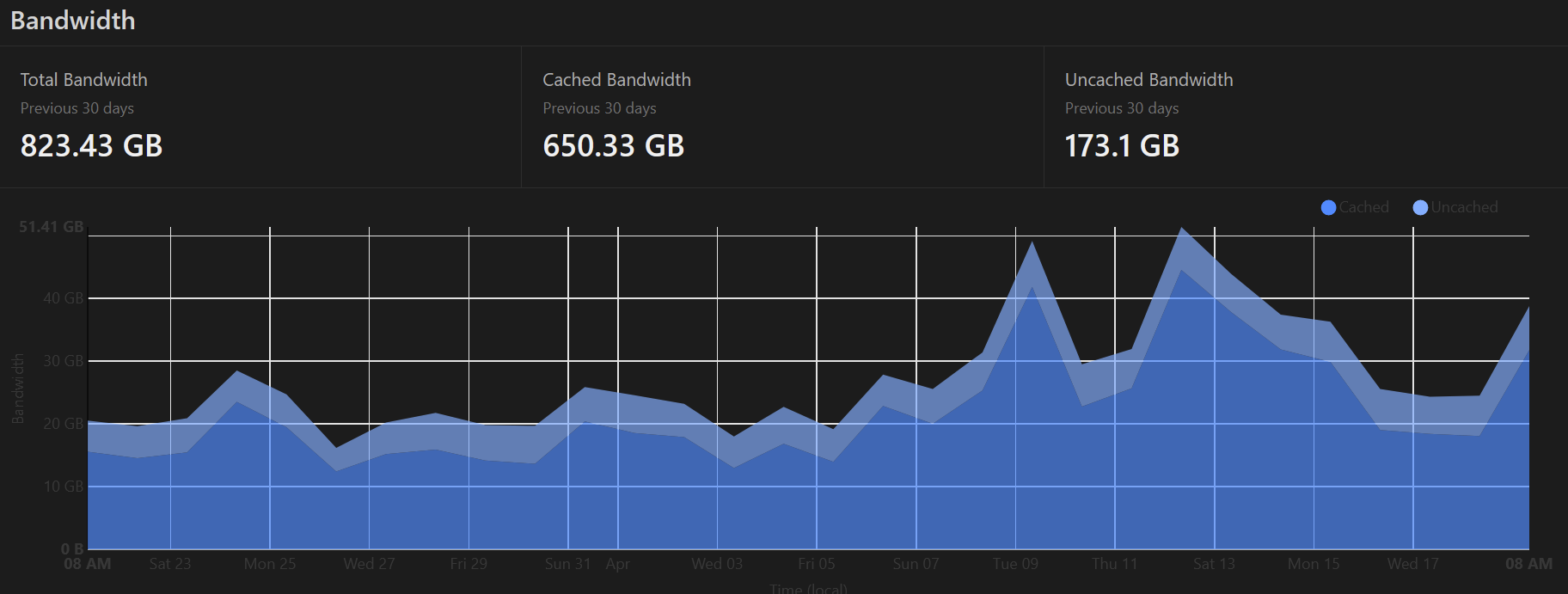

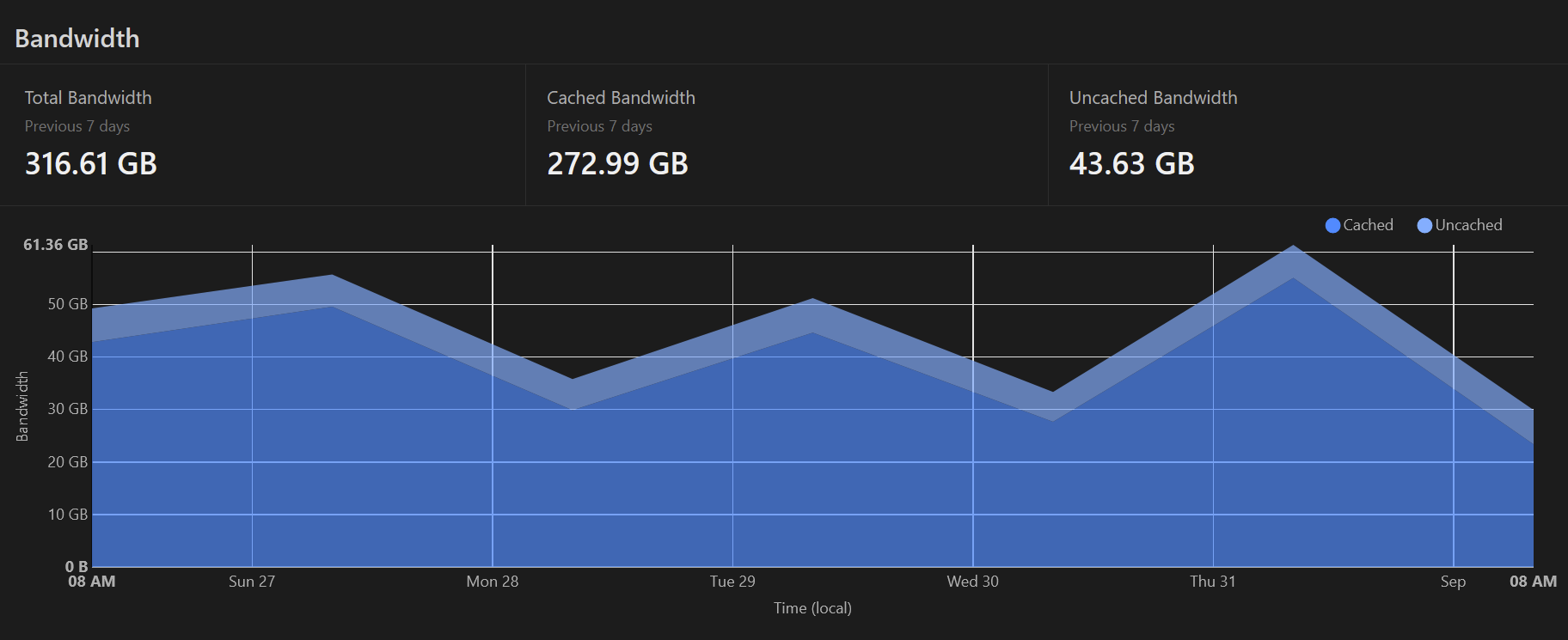

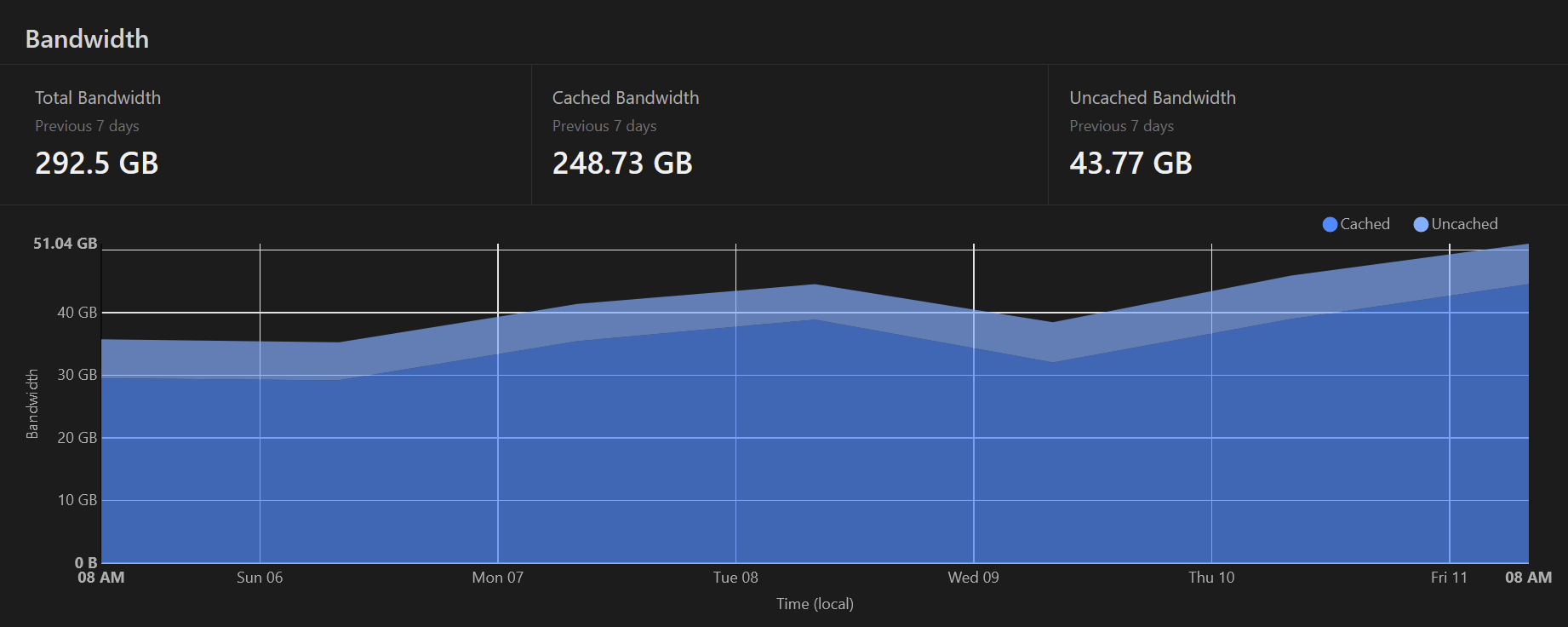

Cloudfront? No. Cloudflare? Yes 😀

Edit: affecting AZ I mean.

The native lemmy web interface works from mobile devices pretty well.

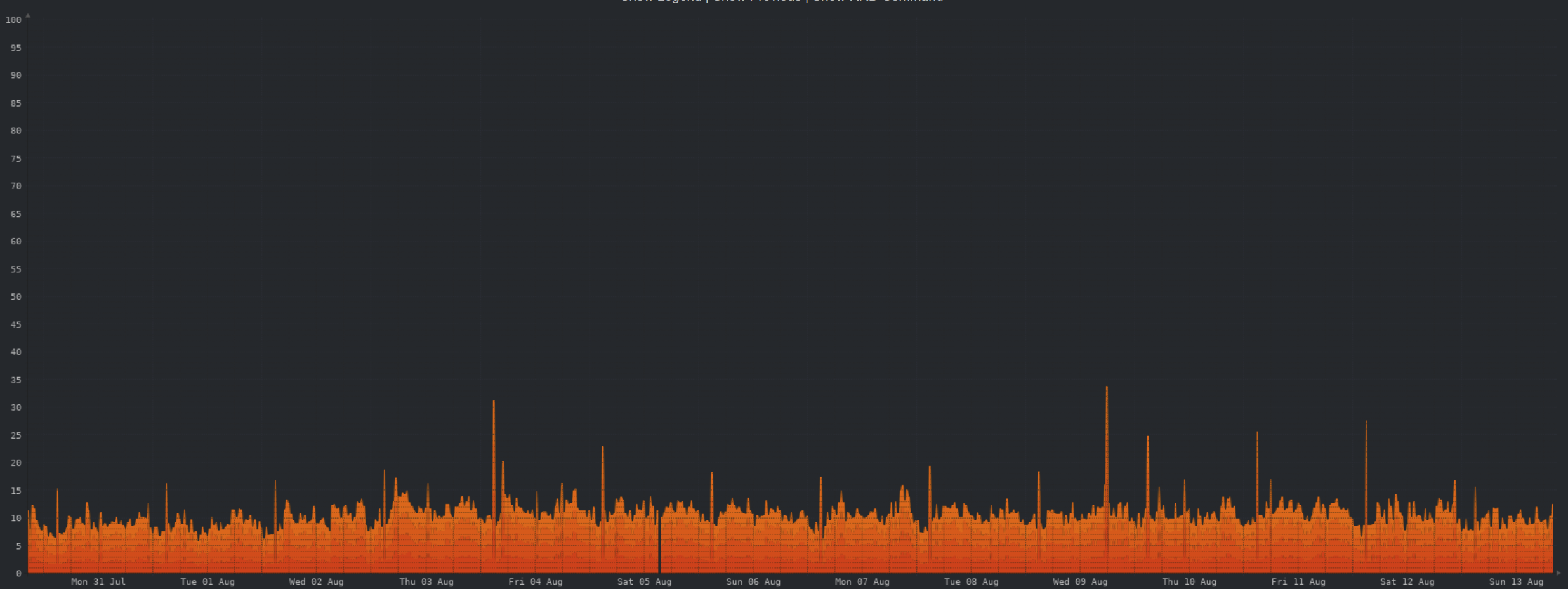

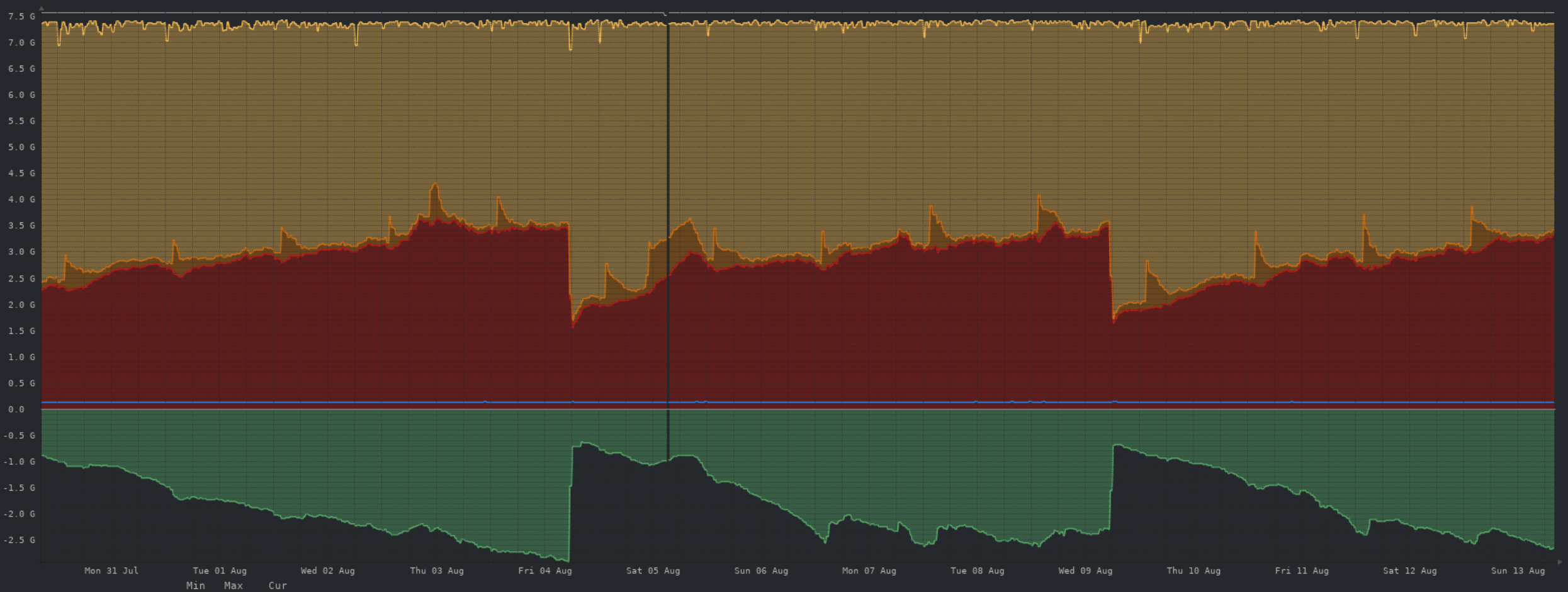

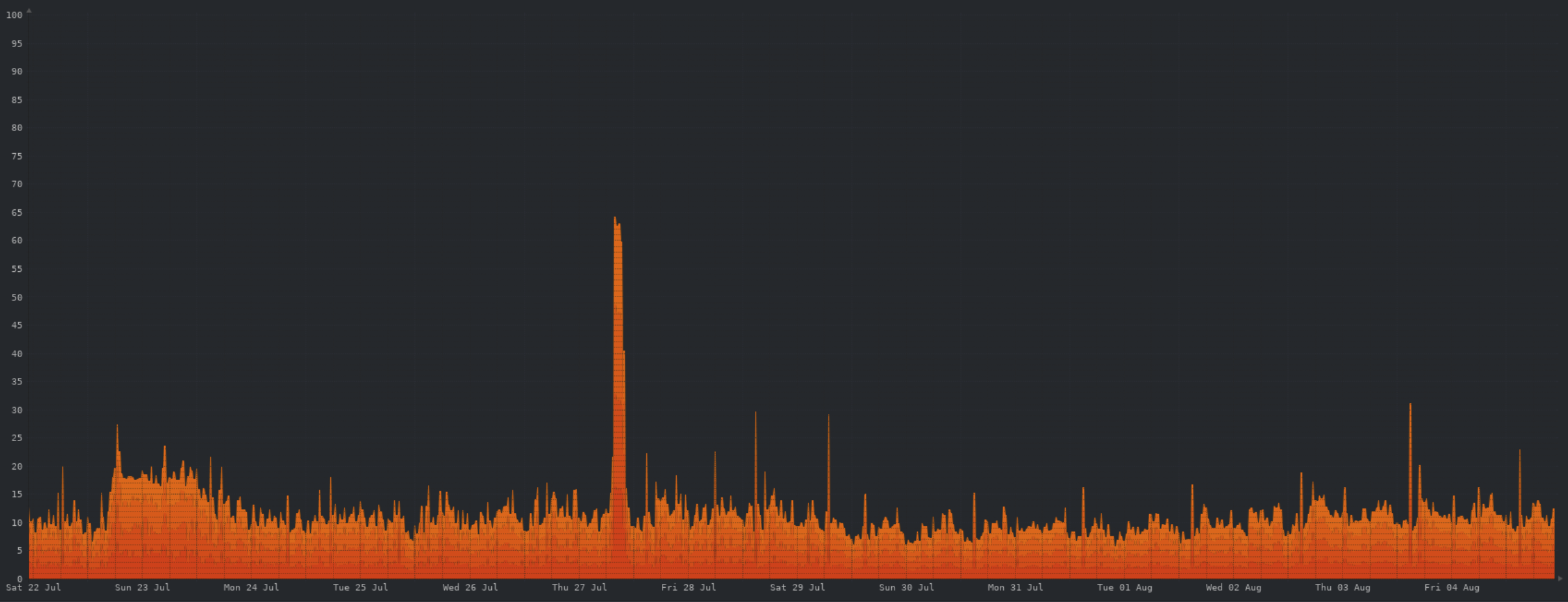

I suspect there are updates to pict-rs that may help. Will check tonight.