this post was submitted on 12 May 2024

471 points (85.8% liked)

linuxmemes

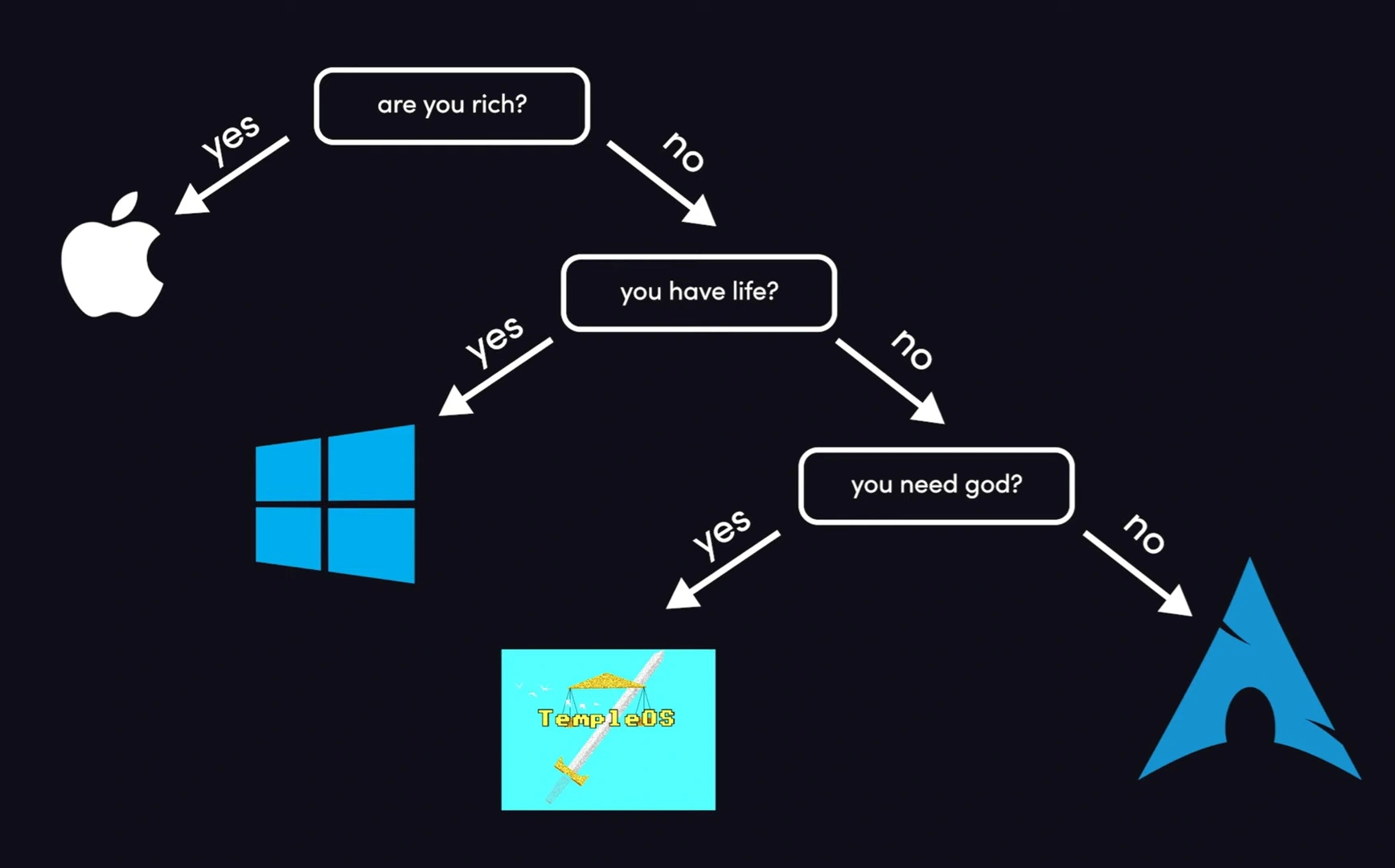

I use Arch btw

Do you have multiple monitors?

Yes - Don't buy a mac

No - Still don't buy a mac

I have a Mac with multiple monitors. It handles them a hell of a lot better than my PC at work.

I don't think it's even possible to use more than two monitors on an M-series computer (except maybe if you spend extra for the Max edition).

That is only the case on the base model chips. The Pro, Max, and Ultra chips all support multiple monitors.

Yeah, it's a ridiculous limitation.

It's still ridiculous to limit it.

Pretty much any modern computer should be able to output to more monitors than that.

There isn’t some software limitation here. It’s more that they only put two display controllers in the base level M-series chips. The vast, vast majority of users will have at most two displays. Putting more display controllers would add (minimal, but real) cost and complexity that most people won’t benefit from at all.

On the current gen base level chips, you can have one external display plus the onboard one, or close the laptop and have two externals. Seems like plenty to me for the cheapest option.

If true they are some pretty shitty chips.

Having two external monitors plus the built-in monitor is extremely common.

At work almost everyone has at least two monitors because anything less sucks (a few use just a big external one plus the built-in), and it's also common to use the built-in monitor for stuff like Slack or Teams.

Having more than two monitors isn't a "pro" feature. It's the norm nowadays.

Sure it might be enough for the cheapest option if the cheapest option was cheap. Unfortunately they are absolutely not cheap, and are in fact fairly expensive.

At work, my work PC laptop drives two 1080p monitors. I don’t keep it open to use the onboard one because Windows is so terrible at handling displays of different sizes, and the fans run so much when driving three displays that I think it could take off my desk. So I know what you’re talking about.

But. Have you ever used a Mac with two displays? A current-gen MacBook Air will drive a 6K@60Hz and a 5K@60Hz display when closed, and it’ll do it silently. Or both displays at “only” 4K if you want to crank the refresh rate to over 100Hz. You think that’s not enough for the least expensive laptop they sell?

I’m really tired of people who don’t know what they’re capable of telling me why I shouldn’t enjoy using my computer.

Yeah people don't get that they are trading output quantity for output quality. You can't have both at the same time on lower end hardware. Maybe you could support both separately, but that's going to be more complex. Higher end hardware? Sure do whatever.

Not really. There is a compromise between output resolution, refresh rate, bit depth (think HDR), number of displays, and the overall system performance. Another computer might technically have more monitor output, but they probably sacrificed something to get there like resolution, HDR, power consumption or cost. Apple is doing 5K output with HDR on their lowest end chips. Think about that for a minute.

A lot of people like to blame AMD for high idle power usage when they are running multi-monitor setups with different refresh rates and resolutions. Likewise, I have seen Intel systems struggle to run a single 4K monitor because they were in single-channel mode. Apple probably wanted to avoid those issues on their lower-end chips, which have much less bandwidth to play with.

There is no reason that they couldn't do 3 1080p monitors or more especially when the newer generation chips are supposedly so much faster than the generation before it.

Well yeah, no shit Sherlock. They could have done that in the first generation. It takes four 1080p monitors to equal the resolution of one 4K monitor. Apple though doesn't have a good enough reason to support many low res monitors. That's not their typical consumer base, who mostly use retina displays or other high res displays. Apple only sells high res displays. The display in the actual laptops is way above 1080p. In other words they chose quality over quantity as a design decision.

1080p is perfect for getting actual work done though.

And there is no reason why they couldn't allow you to have multiple normal res monitors. It's not a limitation to get you to overspend on a more expensive computer.

I mean, yeah, don’t ever buy a Mac, but what’s up with the multiple monitors? Do they struggle with it?

macOS out of the box fucking sucks for monitor scaling with third party monitors. It's honestly laughable for a modern OS. You can install some third party software that fixes it completely, but it really shouldn't be necessary. I use an (admittedly pretty strange) LG DualUp monitor as a secondary, and out of the box macOS can only make everything either extremely tiny, extremely large, or blurry.

Other than that, I've had no problems at all, and the window scaling between different DPI monitors is a lot smoother than it was with Windows previously.

The base model chips only support 2 monitors. The Pro, Max, and Ultra chips all support multiple monitors.

They all support two monitors (one internal and one external for macbooks, and two external for desktops). It's not an artificial restriction. Each additional monitor needs a framebuffer. That's an actual circuit that needs to be present in the chip.

So they cheaped out on what is supposed to be a premium brand, gotcha

What percentage of people who buy the least expensive MacBook do you think are going to hook it up to more than two displays? Or should they add more display controllers that won’t ever be used and charge more for them? I feel like either way people who would never buy one will complain on behalf of people who are fine with them.

The least expensive MacBook is still $1000, closer to $1500 if you spec it with reasonable storage/ram. It really isn't that much of a stretch to add $100-300 for a 1080/1440p monitor or two at a desk.

Not necessarily. The base machines aren't that expensive, and this chip is also used in iPads. They support high resolution HDR output. The higher the number of monitors, resolution, bit depth, and refresh rate the more bandwidth is required for display output and the more complex and expensive the framebuffers are. Another system might support 3 or 4 monitors, but not support 5K output like the MacBooks do. I've seen Intel systems that struggled to even do a single 4K 60 FPS until I added another ram stick to make it dual channel. Apple do 5K output. Like sure they might technically support more monitors in theory, but in practice you will run into limitations if those monitors require too much bandwidth.

Oh yeah and these systems also need to share bandwidth between the framebuffers, CPU, and GPU. It's no wonder they didn't put 3 or more very high resolution buffers into the lower end chips which have less bandwidth than the higher end ones. Even if it did work the performance impacts probably aren't worth it for a small number of users.
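To put rough numbers on the bandwidth point, here's a minimal sketch that estimates the raw pixel data rate for a few display modes. It assumes uncompressed RGB output and ignores blanking intervals and stream compression, so real link rates will differ. The function name and the list of modes are just illustrative choices, not anything from Apple's specs.

```python
def raw_bandwidth_gbps(width, height, refresh_hz, bits_per_channel=8, channels=3):
    """Uncompressed pixel data rate in Gbit/s.

    Assumption: plain RGB with no blanking overhead and no compression,
    so this is a lower-level estimate, not an actual link requirement.
    """
    bits_per_frame = width * height * bits_per_channel * channels
    return bits_per_frame * refresh_hz / 1e9


# Hypothetical example modes: (width, height, refresh rate, bits per channel)
modes = {
    "1080p @ 60 Hz, 8-bit": (1920, 1080, 60, 8),
    "4K @ 60 Hz, 8-bit": (3840, 2160, 60, 8),
    "5K @ 60 Hz, 10-bit": (5120, 2880, 60, 10),
}

for name, (w, h, hz, bpc) in modes.items():
    print(f"{name}: {raw_bandwidth_gbps(w, h, hz, bpc):.2f} Gbit/s")
```

Under these assumptions a single 10-bit 5K stream needs roughly nine times the raw data rate of one 8-bit 1080p stream, which is the kind of trade-off being described above.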

TIL, thanks! 🌝

I use a Plugable docking station with DisplayLink with a base-level M1 MacBook Air and it handles multiple (3x 1080p) displays perfectly. My (limited) understanding is that they do that just using a driver. So at a basic level, couldn't Apple include driver support for multiple monitors natively, seeing as it has adequate bandwidth in practice?

Sigh. It's not just a fricking driver. It's an entire framebuffer you plug into a USB or Thunderbolt port. That's why they are more expensive, and why they even need a driver.

A 1080p monitor has one quarter of the pixels of a 4K monitor. The necessary bandwidth increases with the pixels required. Apple chooses instead to use the bandwidth they have to support 2 5K and 6K monitors, instead of supporting say 8 or 10 1080p monitors. That's a design decision that they probably thought made sense for the product they wanted to produce. Honestly I agree with them for the most part. Most people don't run 8 monitors, very few have even 3, and those that do can just buy the higher end model or get an adapter like you did. If you are the kind of person to use 3 monitors you probably also want the extra performance.
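For anyone who wants to check the pixel math, here's a quick sketch. It assumes 5K means 5120x2880 and 6K means 6016x3384, and it compares pixel counts only. That's a simplification: as mentioned elsewhere in the thread, the number of display controllers on the chip is a separate hardware limit, so this is not a claim about how many monitors any Mac can actually drive.

```python
def pixels(width, height):
    """Total pixel count for a display of the given resolution."""
    return width * height


# Assumed resolutions for the labels used in the thread.
FULL_HD = pixels(1920, 1080)
UHD_4K = pixels(3840, 2160)
FIVE_K = pixels(5120, 2880)
SIX_K = pixels(6016, 3384)

# One 4K panel has exactly four times the pixels of a 1080p panel.
ratio_4k_to_1080p = UHD_4K // FULL_HD

# How many whole 1080p panels fit in the pixel budget of one 5K plus one 6K display.
equivalent_1080p = (FIVE_K + SIX_K) // FULL_HD

print(f"4K is {ratio_4k_to_1080p}x the pixels of 1080p")
print(f"5K + 6K is about {equivalent_1080p} x 1080p worth of pixels")
```

By pixel count alone, the "8 or 10" figure above is on the conservative side.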

Thank you for taking the time to reply, and for sharing your expertise in our conversation! I understand different resolutions, that the docking station has its own chipset, and why the Plugable is more expensive than other docking stations as a result. I now have a more nuanced understanding of framebuffers and how DisplayLink interfaces with an OS like macOS.

Allow me to clarify the point I tried to make (and admittedly, I didn't do a good job of expressing it previously). Rather than focusing on the technical specs, I had intended to have a more general conversation about design decisions and Apple's philosophy. They know that consumers will want to hook up a base tier MacBook Air to two external displays, and intentionally chose not to build-in an additional frame-buffer to force users to spend more. I sincerely doubt there's any cost-saving for the customer because Apple doesn't include that out of the box.

Apple's philosophy has always been that they know what's best for their users. If a 2020 M1 MacBook Air supports both the internal 2K display and a single external 6K display, that suggests to me it should have the horsepower to drive two external 1080p displays (that's just a feeling I have, not a known fact). And I'll acknowledge that Apple has improved this limitation for the newer MBAs, which allow you to disable the built-in display and use two external displays.

My broader point is that Apple "knows what's best" for their users: they want customers to buy an Apple display rather than to just stick with the 1080p LCDs they already own, because they're not Retina®. Which do you honestly think is a more common use-case for a MacBook Air user: wanting to connect to two monitors (home office, University classroom system, numerous board room settings I've worked in, etc), or to connect their $1200 MBA to a $1600-$2300+ Studio Display? For that, anyone with an iota of common sense would be using a MBP etc since they're likely a creative professional who would want the additional compute and graphics power for photo/video-editing, etc.

I don't disagree with your explanation of the thought-process behind why Apple may have made this hardware decision for MBAs, but it is effectively an arbitrary, non cost-saving decision that will certainly impede customers who expect two displays to just work, since they can do that on their 10-year-old Toshiba Satellite or w/e.

Thanks, and have a great day

It's not just about Retina displays. High res and HDR aren't uncommon anymore. Pretty much all new TVs anybody would want to buy will be 4K. It has to support the Apple 5K display anyway because that's one of their products.

As we've discussed, two external displays are supported on the new MacBook base models. It was a bit of an oversight on the original, sure, but that's been fixed now.

Also, the same SoC is used in iPads. It's not Mac-only. I can't imagine wanting three displays on an iPad.

For me it's that compared to windows and linux, handling multiple windows between screens is always problematic, and is made worse by alt-tab bringing up all the windows for an application, which means they pop up in the other monitors too which isn't usually what I want. Maximizing is usually not as straightforward as one would hope, and the dock moves to any window if you leave your pointer at the bottom which can get annoying fast. As some point out apparently there's 3rd party software that allows you to fix these issues, but that's not an option for me because I use a locked-down Mac for work and can't install 3rd party software, so I'm stuck with the annoying base behavior.