this post was submitted on 02 Mar 2025

Science Memes

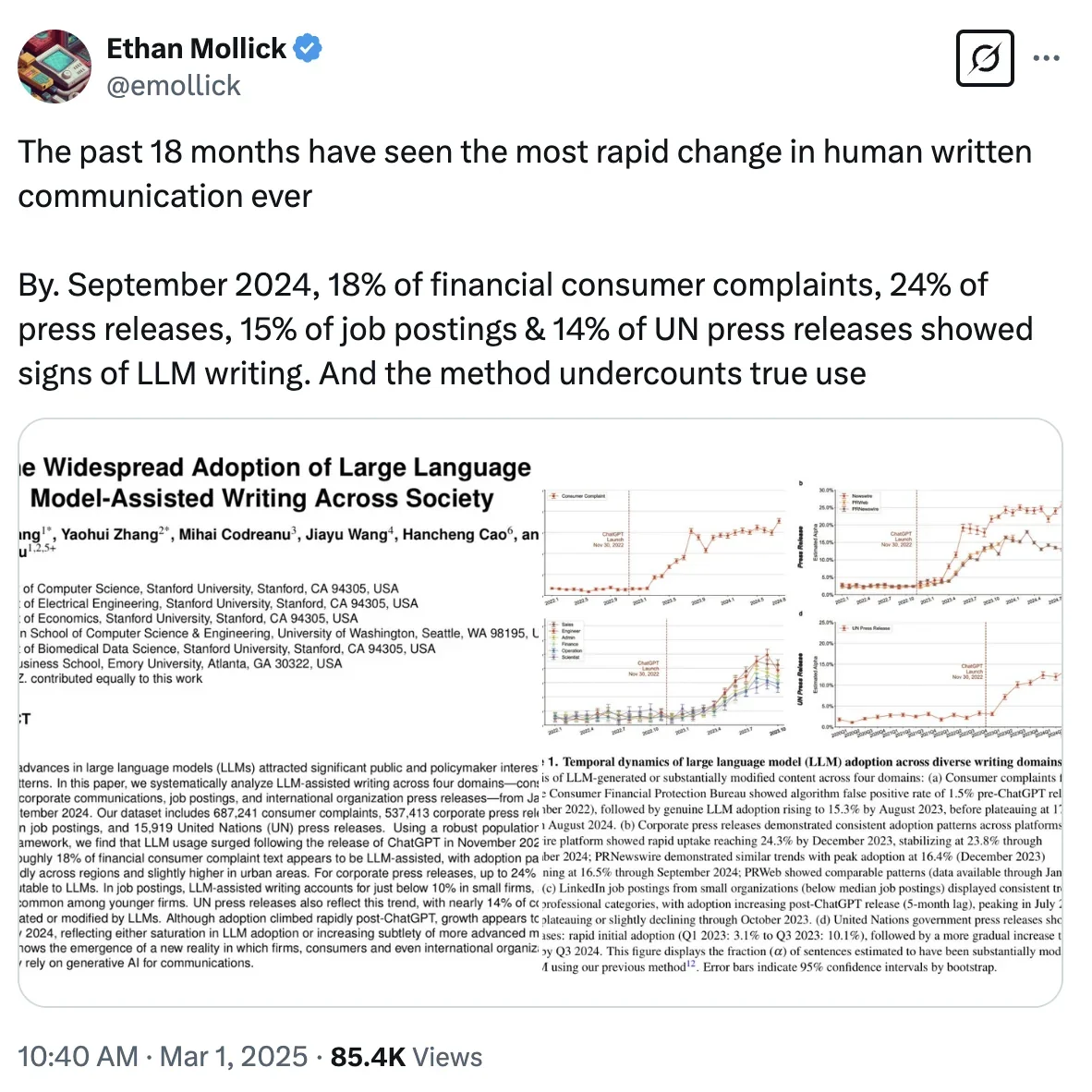

They developed their own detector, described in another paper. Basically, it works backward from a text's vocabulary to estimate how much of it was written by ChatGPT.
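To make the "estimate from vocabulary" idea concrete, here's a rough sketch of one way such an estimate could work. It is not the paper's actual method, just a toy: assume you already have word frequencies for human text and for LLM text (the `human_probs` and `llm_probs` tables below are made up), then find the mixing fraction `alpha` that best explains a pile of documents.

```python
import math

def doc_log_prob(tokens, word_probs, floor=1e-6):
    """Log-probability of a document under a simple unigram model.
    Unknown words get a small floor probability (an assumption of this toy)."""
    return sum(math.log(word_probs.get(t, floor)) for t in tokens)

def estimate_llm_fraction(docs, human_probs, llm_probs, grid=101):
    """Grid-search the alpha in [0, 1] that maximizes the likelihood of
    the mixture (1 - alpha) * P_human(doc) + alpha * P_llm(doc)."""
    pairs = [(doc_log_prob(d, human_probs), doc_log_prob(d, llm_probs)) for d in docs]
    best_alpha, best_ll = 0.0, -math.inf
    for i in range(grid):
        alpha = i / (grid - 1)
        total = 0.0
        for log_h, log_l in pairs:
            m = max(log_h, log_l)  # shift by the max for numerical stability
            mix = (1 - alpha) * math.exp(log_h - m) + alpha * math.exp(log_l - m)
            if mix <= 0.0:
                total = -math.inf
                break
            total += m + math.log(mix)
        if total > best_ll:
            best_alpha, best_ll = alpha, total
    return best_alpha

# Made-up frequencies: the "LLM" table overuses "delve" and "intricate".
human_probs = {"we": 0.45, "study": 0.45, "delve": 0.05, "intricate": 0.05}
llm_probs = {"we": 0.20, "study": 0.20, "delve": 0.30, "intricate": 0.30}

human_doc = ["we", "study", "we", "study"]
llm_doc = ["delve", "intricate", "delve", "intricate"]

# Three human-looking documents and one LLM-looking one.
alpha = estimate_llm_fraction([human_doc] * 3 + [llm_doc], human_probs, llm_probs)
print(f"estimated LLM fraction: {alpha:.2f}")
```

The point is that it estimates a share across a whole corpus rather than judging any single text, which is why vocabulary quirks are enough to go on.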

This sounds plausible to me, as specific models (or even specific families) do tend to have the same vocabulary/phrase biases and “quirks.” There are even some community “slop filters” used for sampling specific models, filled with phrases they’re known to overuse through experience, with “shivers down her spine” being a meme for Anthropic IIRC.

It’s defeatable. But the “good” thing is most LLM writing is incredibly lazy, not meticulously crafted to avoid detection.

This is yet another advantage of self-hosted LLMs, as they:

- Tend to have different quirks than closed models.

- Have finetunes made explicitly to remove their slop.

- Can use exotic sampling that “bans” whatever list of phrases you specify (i.e. the LLM backtracks and retries when it runs into one, which is not normally a feature you get over APIs), or penalizes repeated phrases (i.e. DRY sampling, again not a standard feature).