this post was submitted on 18 May 2024

xkcd

A community for a webcomic of romance, sarcasm, math, and language.

founded 1 year ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

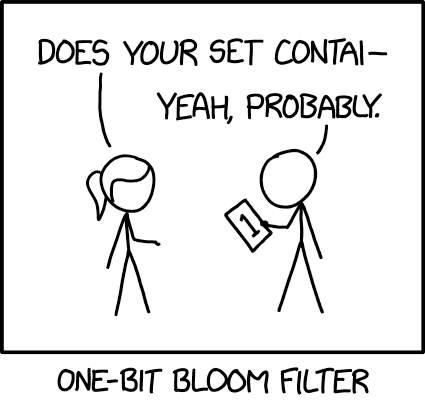

Well, yes and no. With a straight-up hash set, you're keeping `set_size * bits_per_element` bits plus whatever the overhead of the hash table is in memory, which might not be tenable for very large sets. With a Bloom filter that has e.g. a ~1% false positive rate and an ideal k parameter (number of hash functions, see e.g. the Bloom filter wiki article), you're only keeping ~10 bits per element regardless of element size, because it doesn't store the elements themselves or even their full hashes. It only tells you whether some element is probably in the set or definitely not, so you can't e.g. enumerate the elements in the set.

As an example of memory usage, a Bloom filter with a false positive rate of ~1% for 500 million elements would need about 571 MiB. Note that although the size of the filter doesn't grow when you insert elements, the false positive rate goes up once you go past that 500 million element count.

Lookup and insertion time complexity for a Bloom filter is O(k), where k is the parameter I mentioned and a constant, i.e. effectively O(1).
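To make the numbers above concrete, here's a rough Python sketch. The sizing uses the standard textbook formulas (bits per element = -ln(p) / (ln 2)^2, k = bits per element * ln 2). The names `bloom_parameters` and `BloomFilter`, and the choice of SHA-256 with double hashing to derive the k indexes, are just my assumptions for illustration, not how any particular library does it.

```python
import hashlib
import math


def bloom_parameters(n_elements: int, fp_rate: float) -> tuple[int, int]:
    """Return (total bits m, hash count k) for the standard optimal sizing."""
    bits_per_element = -math.log(fp_rate) / (math.log(2) ** 2)
    m = math.ceil(n_elements * bits_per_element)
    k = max(1, round(bits_per_element * math.log(2)))
    return m, k


class BloomFilter:
    """Toy Bloom filter: stores only a bit array, never the elements."""

    def __init__(self, n_elements: int, fp_rate: float) -> None:
        self.m, self.k = bloom_parameters(n_elements, fp_rate)
        self.bits = bytearray((self.m + 7) // 8)

    def _positions(self, item: bytes):
        # Assumption: double hashing, deriving k indexes from two
        # 64-bit halves of a single SHA-256 digest.
        digest = hashlib.sha256(item).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # keep the step non-zero
        for i in range(self.k):
            yield (h1 + i * h2) % self.m

    def add(self, item: bytes) -> None:
        # O(k): set k bits.
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: bytes) -> bool:
        # O(k): "probably present" only if all k bits are set.
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


# The sizing example from the comment: 500 million elements at ~1%.
m, k = bloom_parameters(500_000_000, 0.01)
print(f"{m / 8 / 2**20:.0f} MiB, k = {k}")  # prints "571 MiB, k = 7"
```

Note there's no way to list what's been added: the filter only has `add` and a membership test, which is exactly the trade-off that gets you down to ~10 bits per element.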

Probabilistic set membership queries are mainly useful when you're dealing with ginormous sets of elements that you can't shove into a regular in-memory hash set. A good example in the wiki article is CDN cache filtering: