New-ish to Haskell. I can't figure out the best way to get Cassava (Data.Csv) to do what I want, and I can't tell whether I'm missing some Haskell type idioms, some common knowledge, or something else.

Task: I need to read in a CSV, but I don't know ahead of time what the headers/columns are going to be. The user will provide input saying which headers from the CSV they want processed, but I won't know where (index-wise) those columns will be in the CSV, nor how many columns there will be (either the number the user asks for or the total in the file). Say I have a [String] which lists the headers they want.

Cassava is able to read CSVs with and without headers.

Without headers Cassava can read in entire rows, even if it doesn't know how many columns are in that row. But then I wouldn't have the header data to filter for the values that I need.

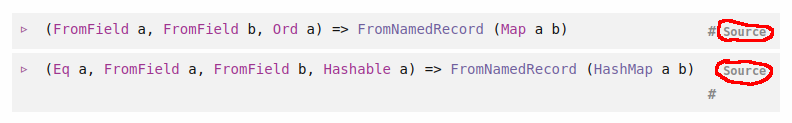
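For reference, a minimal sketch of the headerless style I mean. `readRows` is just a name I made up; `decode`, `NoHeader` and the `Vector` instance come from Cassava. Each row comes back as a vector of raw fields, so rows can be any length:

```haskell
{-# LANGUAGE OverloadedStrings #-}
-- OverloadedStrings is only here so ByteString literals are easy to write
-- when trying this out.

import qualified Data.ByteString      as B
import qualified Data.ByteString.Lazy as BL
import           Data.Csv             (HasHeader (NoHeader), decode)
import qualified Data.Vector          as V

-- Decode every line as a vector of raw fields. No column count is fixed
-- in the type, so each row can have a different length.
-- (readRows is a hypothetical helper name, not part of Cassava.)
readRows :: BL.ByteString -> Either String (V.Vector (V.Vector B.ByteString))
readRows = decode NoHeader
```

I'd call it on the result of `BL.readFile` for whatever path the user gives me.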

With headers Cassava requires(?) you to define a record type with an instance of its FromNamedRecord typeclass, which is how you access the fields of a row by name (using the record fields). But for that to work, it seems you need to know everything about the headers ahead of time: their names, how many there are, and their order. You then mirror that in your record type.
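To make the record approach concrete, this is roughly the shape I mean. The `Person` type, the `name`/`age` columns and `readPeople` are made up for illustration; `FromNamedRecord`, `(.:)` and `decodeByName` are Cassava's. Every column the record wants is written into the source, which is exactly what I can't do when the user picks the headers at runtime:

```haskell
{-# LANGUAGE OverloadedStrings #-}

import qualified Data.ByteString      as B
import qualified Data.ByteString.Lazy as BL
import           Data.Csv             (FromNamedRecord (..), Header,
                                       decodeByName, (.:))
import qualified Data.Vector          as V

-- Hypothetical record: the column names are fixed at compile time.
data Person = Person
  { name :: !B.ByteString
  , age  :: !Int
  } deriving (Show, Eq)

-- Each field is looked up by its header name.
instance FromNamedRecord Person where
  parseNamedRecord r = Person <$> r .: "name" <*> r .: "age"

-- Returns the parsed header alongside the decoded rows.
-- (readPeople is a hypothetical helper name.)
readPeople :: BL.ByteString -> Either String (Header, V.Vector Person)
readPeople = decodeByName
```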

Hopefully I'm missing something obvious, but it feels a lot like I have my hands tied behind my back dealing with the types provided by Cassava.

Help greatly appreciated :)