You can do quite a bit with 4GB RAM. A lot of people use VPSes with 4GB (or less) RAM for web hosting, small database servers, backups, etc. Big providers like DigitalOcean tend to have 1GB RAM in their lowest plans.

your hardware ain't shit until it's a first gen core2duo in a random Dell office PC and 2gb of memory that you specifically only use just because it's a cheaper way to get x86 when you can't use your raspberry pi.

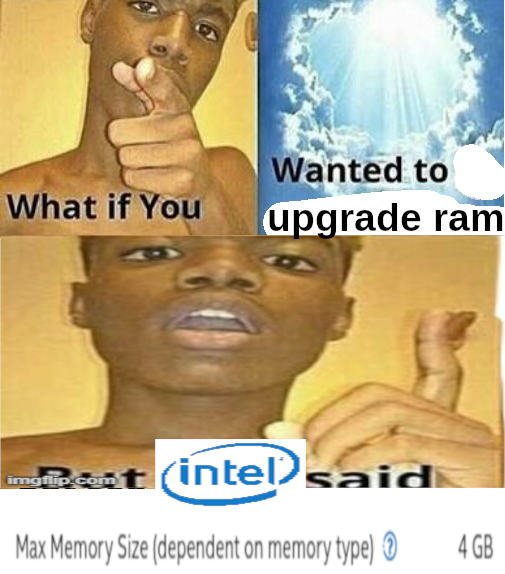

Also, the spec sheets lie most of the time: a machine may technically run fine with more memory than listed, especially an older one from when DIMM capacities were a lot lower than they can be now. It just won't be "supported".

I used to selfhost on a Core 2 Duo ThinkPad R60i. It had a broken fan, so I had to hide it in a storage room; otherwise the weird noises it made would wake people up during the night. It was pretty damn slow. Even opening the Proxmox UI remotely took time. KrISS feed worked pretty well tho.

I have since upgraded to... well, nothing. The fan is KO now and the laptop won't boot. It's a shame because not having access to radicale is making my life more difficult than it should be. I use CalDAV from disroot.org but it would be nice to share a calendar with my family too.

3x Intel NUC 6th gen i5 (2 cores) 32gb RAM. Proxmox cluster with ceph.

I just ignored the limitation and once tried a single 32gb SODIMM (out of a laptop), and it worked fine, but I went back to 2x16gb DIMMs since the real limit was still the 2 CPU cores. Lol.

Running that cluster 7 or so years now since I bought them new.

I suggest only running off shit tier since three nodes gives redundancy and enough performance. I've run entire proofs of concept for clients off them: dual domain controllers, FC RD gateway, broker, session hosts, FSLogix, etc., back when MS had only just bought that tech. Meanwhile my home "ARR" stack just plugs along in Docker containers. Even my OPNsense router is virtual, running on them. Just get a proper managed switch and bring the internet in on a VLAN, into the guest VM on a separate virtual NIC.

Point is, it's still capable today.

How is ceph working out for you btw? I'm looking into distributed storage solutions rn. My usecase is to have a single unified filesystem/index, but to store the contents of the files on different machines, possibly with redundancy. In particular, I want to be able to upload some files to the cluster and be able to see them (the directory structure and filenames) even when the underlying machine storing their content goes offline. Is that a valid usecase for ceph?

I'm far from an expert, sorry, but my experience is so far so good (literally configured with the wizard in Proxmox, set and forget), even through the loss of a single disk. Performance for VM disks was great.

I can't see why regular files would be any different.

I have 3 disks, one on each host, with ceph handling 2 copies (tolerant to 1 disk loss) distributed across them. That's practically what I think you're after.
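As a rough sanity check on that layout, here is a back-of-the-envelope sketch in Python. The disk sizes are made up for illustration, and the "raw divided by copies" rule is only an approximation: it assumes equal-sized disks, one per host, and ignores Ceph's own overhead and the headroom it wants for rebalancing.

```python
def replicated_pool(disk_sizes_gb, copies):
    """Rough usable capacity and loss tolerance for a replicated pool.

    Assumes one disk per host and that each copy lands on a different host.
    """
    if copies < 1 or copies > len(disk_sizes_gb):
        raise ValueError("copies must be between 1 and the number of disks")
    raw = sum(disk_sizes_gb)
    return {
        "raw_gb": raw,
        # Every object is stored `copies` times, so usable space shrinks by that factor.
        "usable_gb": raw / copies,
        # Data survives as long as at least one copy of each object is left.
        "disk_losses_tolerated": copies - 1,
    }


# Hypothetical sizes: three 1 TB disks, two copies of everything.
layout = replicated_pool([1000, 1000, 1000], copies=2)
print(layout)
```

So with two copies you give up half the raw space in exchange for surviving one disk (or host) failure without losing data.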

I'm not sure about seeing the file system while the hosts are all offline, but if you've got any one system with a valid copy online you should be able to see it. I do. But my emphasis is generally on getting the host back online.

I'm not 100% sure what you're trying to do, but a mix of ceph as remote storage plus something like syncthing on an endpoint to send stuff to it might work? Syncthing might just work without ceph.

I also run zfs on an 8 disk nas that's my primary storage with shares for my docker to send stuff, and media server to get it off. That's just truenas scale. That way it handles data similarly. Zfs is also very good, but until scale came out, it wasn't really possible to have the "add a compute node to expand your storage pool" which is how I want my vm hosts. Zfs scale looks way harder than ceph.

Not sure if any of that is helpful for your case but I recommend trying something if you've got spare hardware, and see how it goes on dummy data, then blow it away try something else. See how it acts when you take a machine offline. When you know what you want, do a final blow away and implement it with the way you learned to do it best.

> Not sure if any of that is helpful for your case but I recommend trying something if you've got spare hardware, and see how it goes on dummy data, then blow it away try something else.

This is good advice, thanks! Pretty much what I'm doing right now. Already tried it with IPFS, and found that it didn't meet my needs. Currently setting up a tahoe-lafs grid to see how it works. Will try out ceph after this.

Running a bunch of services here on a i3 PC I built for my wife back in 2010. I've since upgraded the RAM to 16GB, added as many hard drives as there are SATA ports on the mobo, re-bedded the heatsink, etc.

It's pretty much always run on Debian, but all services are on Docker these days so the base distro doesn't matter as much as it used to.

I'd like to get a good backup solution going for it so I can actually use it for important data, but realistically I'm probably just going to replace it with a NAS at some point.

It's not absolutely shit, it's a Thinkpad t440s with an i7 and 8gigs of RAM and a completely broken trackpad that I ordered to use as a PC when my desktop wasn't working in 2018. Started with a bare server OS then quickly realized the value of virtualization and deployed Proxmox on it in 2019. Have been using it as a modest little server ever since. But I realize it's now 10 years old. And it might be my server for another 5 years, or more if it can manage it.

In the host OS I tweaked some value to ensure the battery never charges over 80%. And while I don't know exactly how much electricity it consumes on idle, I believe it's not too much. Works great for what I want. The most significant issue is some error message that I can't remember the text of that would pop up, I think related to the NIC. I guess Linux and the NIC in this laptop have/had some kind of mutual misunderstanding.
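For anyone wondering what such a tweak can look like: the comment doesn't say which value was changed, so this is only a guess at one common approach. On many ThinkPads, recent Linux kernels expose a `charge_control_end_threshold` file under `/sys/class/power_supply/`. The battery name `BAT0` and the helper `set_charge_limit` below are assumptions for illustration, and writing to the real file typically needs root.

```python
import tempfile
from pathlib import Path

# Assumed sysfs location; the battery name (BAT0) varies between machines.
SYSFS_THRESHOLD = Path("/sys/class/power_supply/BAT0/charge_control_end_threshold")


def set_charge_limit(percent, threshold_file=SYSFS_THRESHOLD):
    """Write a stop-charging percentage and return the value read back."""
    if not 1 <= percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    threshold_file = Path(threshold_file)
    if not threshold_file.exists():
        # The driver for this laptop may not offer the knob at all.
        raise FileNotFoundError(f"{threshold_file} not found")
    threshold_file.write_text(f"{percent}\n")
    return int(threshold_file.read_text().strip())


# Dry run against a temporary file so the demo never touches real hardware.
with tempfile.TemporaryDirectory() as tmp:
    fake = Path(tmp) / "charge_control_end_threshold"
    fake.write_text("100\n")
    applied = set_charge_limit(80, fake)

print(applied)
```

On a real machine you would call `set_charge_limit(80)` as root and let it use the default path, after checking that the file actually exists for your battery.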

Yeah, absolutely. Same here, I find used laptops often make GREAT homelab systems, and ones with broken screens/mice/keyboards can be even better since you can get them CHEAP and still fully use them.

I have 4 doing various things including one acting as my "desktop" down in the homelab. But they're between 4 and 14 years old and do a great job for what they're used for.

I've got an i3-10100, 16gb ram, and an unused gtx 960. It's terrible but it's amazing at the same time. I built it as a gaming pc then quit gaming.

I'm still interested in self-hosting, but I actually tried getting into it a year or so ago. I bought a s***** desktop computer from Walmart and installed Windows Server 2020 on it to practice on.

Thought I could use it to put some bullet points on my resume, and maybe get into self-hosting later with Nextcloud. I ended up not fully following through because I felt like I first needed to buy new editions of the server administration and network infrastructure textbooks I had learned from a decade prior before I could continue with giving it an FQDN, setting it up as a primary DNS server or pointing it at one, etc.

So it was only accessible on my LAN, because I was afraid of making it a remotely accessible server unless I knew I had good firewall rules, and had set up the primary DNS server correctly, and ultimately just never finished setting it up. The most ever accomplished was getting it working as a file server for personal storage, and creating local accounts with usernames and passwords for both myself and my mom, whom I was living with at the time. It could authenticate remote access through our local Wi-Fi, but I never got further.

Yup. Gateway E-475M. It has trouble transcoding some plex streams, but it keeps chugging along. $5 well spent.

it can do it!

... just not today

got a ripping and converting pc that ain't any better. it's all it does, so speed don't matter any. hb has queue, so nbd. i just let it go... and go... and go...

My NAS is on an embedded Xeon that at this point is close to a decade old and one of my proxmox boxes is on an Intel 6500t. I'm not really running anything on any really low spec machines anymore, though earlyish in the pandemic I was running boinc with the Open Pandemics project on 4 raspberry pis.

I'm self-hosting on a ThinkCentre with a 500GB HDD, a 2-core AMD A6, and 8GB RAM (LAN access only) that I got very cheap.

It could be better, I'm going to buy a new computer for personal use and I'm the only one in my family who uses the hosted services, so upgrades will come later 😴